Obtaining device orientation and acceleration using built-in sensors


Introduction

This article demonstrates how to use built-in sensors, such as the gyroscope and the accelerometer, in Tizen native applications. It is based on the code of a sample game, simplified to focus on a particular part of the Tizen Native API.

This article is intended for developers with basic knowledge of the C programming language and of the Tizen native application life cycle.

Unlike the Tizen Web API, the Tizen Native API lets you set the sampling interval of a sensor and delivers each measurement with an accurate timestamp. These features are useful for implementing complex algorithms such as motion and gesture detection.

Obtaining data from built-in sensors

This section shows how to register a callback for a chosen sensor, using the accelerometer as an example. To use the Sensor API, include the following header file:

```c
#include <sensor.h>
```

The available sensor types are listed in the sensor_type_e enumeration declared in this header. Most functions of the Sensor API return an int that corresponds to a value of the sensor_error_e enumeration, with SENSOR_ERROR_NONE indicating success.

Registering callbacks in their simplest form might look as follows:

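```c
#include <sensor.h>

[…]

#define ACCELEROMETER_INTERVAL_MS 20

[…]

typedef struct appdata {
    /* some application data */
} appdata_s;

[…]

/* Declared here so that it can be passed to sensor_listener_set_event_cb() */
static void accelerometer_cb(sensor_h sensor, sensor_event_s *event, void *data);

static int
register_accelerometer_callback(appdata_s *ad)
{
    int error;
    bool supported = false;
    sensor_h accelerometer;
    sensor_listener_h accelerationListener;

    /* Stop if the query fails or if the device has no accelerometer */
    error = sensor_is_supported(SENSOR_ACCELEROMETER, &supported);
    if (error != SENSOR_ERROR_NONE) {
        return error;
    }
    if (!supported) {
        return SENSOR_ERROR_NOT_SUPPORTED;
    }

    error = sensor_get_default_sensor(SENSOR_ACCELEROMETER, &accelerometer);
    if (error != SENSOR_ERROR_NONE) {
        return error;
    }

    error = sensor_create_listener(accelerometer, &accelerationListener);
    if (error != SENSOR_ERROR_NONE) {
        return error;
    }

    /* Request an event every ACCELEROMETER_INTERVAL_MS milliseconds */
    error = sensor_listener_set_event_cb(accelerationListener,
            ACCELEROMETER_INTERVAL_MS, accelerometer_cb, ad);
    if (error != SENSOR_ERROR_NONE) {
        sensor_destroy_listener(accelerationListener);
        return error;
    }

    error = sensor_listener_start(accelerationListener);
    if (error != SENSOR_ERROR_NONE) {
        sensor_destroy_listener(accelerationListener);
        return error;
    }

    /* In a real application, keep accelerationListener (e.g. in appdata_s)
       so it can later be stopped with sensor_listener_stop() and released
       with sensor_destroy_listener(). */
    return SENSOR_ERROR_NONE;
}
```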

As you can see, sensor_listener_set_event_cb() takes four arguments: the listener handle, the interval in milliseconds at which the callback should be called, the callback function itself, and a pointer to application data that is passed to the callback.

```c
static void
accelerometer_cb(sensor_h sensor, sensor_event_s *event, void *data)
{
    appdata_s *ad = (appdata_s *)data;

    […]

    /* Some game calculations, such as reflections from the edges,
       calculation of the current speed and resistance of motion.

       Acceleration along each axis:
           event->values[0]
           event->values[1]
           event->values[2]
    */
}
```

The event passed to the callback has the following structure:

```c
typedef struct
{
    int accuracy;
    unsigned long long timestamp;
    int value_count;
    float values[MAX_VALUE_SIZE];
} sensor_event_s;
```

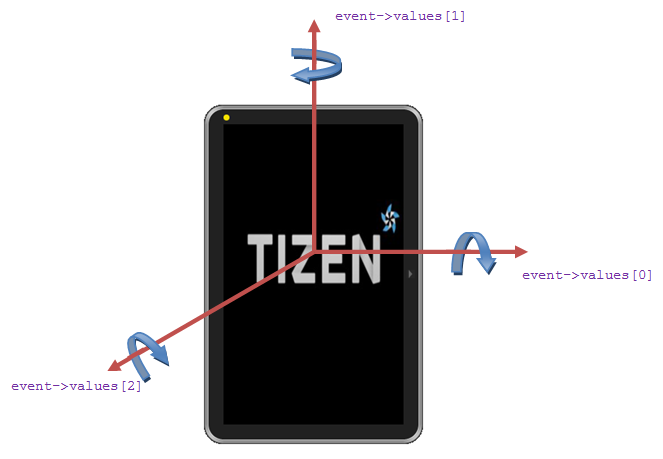

We can obtain 3 values from the accelerometer and gyroscope, one value per each of the spatial components. The particular values correspond to the axis as in the following scheme:

Figure 1 Location of the axis relative to the device.
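To steer the ball, the device-axis readings have to be turned into an acceleration in screen coordinates. The following is a minimal sketch under stated assumptions: it assumes Figure 1 shows X toward the right edge and Y toward the top edge of the device, and that the accelerometer reports the reaction to gravity (a device lying flat reads roughly +9.8 on Z). The vec2_s type and device_to_screen_accel() are hypothetical names, and the signs should be verified on real hardware.

```c
/* Hypothetical 2D vector type for screen-space quantities (y grows downward). */
typedef struct {
    float x;
    float y;
} vec2_s;

/*
 * Converts accelerometer readings along the device X and Y axes into the
 * acceleration of a ball rolling on the screen, in screen coordinates where
 * y grows toward the bottom edge.
 *
 * ASSUMPTIONS: device X points to the right edge, device Y to the top edge,
 * and the sensor reports the reaction to gravity. The ball then accelerates
 * opposite to the reported X/Y components; because screen y points down,
 * the two sign flips cancel for the Y component.
 */
static vec2_s
device_to_screen_accel(float device_x, float device_y)
{
    vec2_s a;

    a.x = -device_x; /* right edge tilted down: negative X reading, ball rolls right */
    a.y = device_y;  /* top edge tilted down: negative Y reading, ball rolls up */
    return a;
}
```

In accelerometer_cb(), this would be called with event->values[0] and event->values[1]; the Z component is not needed for a ball rolling in the plane of the screen.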

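The accuracy field takes one of the values of the sensor_data_accuracy_e enumeration, timestamp records when the measurement was taken, and value_count gives the number of valid elements in values.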
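Because every event carries a timestamp, the callback can measure the actual time between samples instead of assuming that events arrive exactly at the requested interval. A minimal sketch follows. sensor_event_stub_s is a hypothetical stand-in that mirrors the fields of sensor_event_s so the code compiles without the Tizen SDK, and sample_clock_s, elapsed_seconds() and read_xyz() are hypothetical helpers. The timestamp unit is passed in as ticks_per_second because it is an assumption here and should be checked against the API reference for your Tizen version.

```c
#include <stdbool.h>

/* Hypothetical stand-in mirroring sensor_event_s, for compiling without the SDK. */
#define STUB_MAX_VALUE_SIZE 16

typedef struct {
    int accuracy;
    unsigned long long timestamp;
    int value_count;
    float values[STUB_MAX_VALUE_SIZE];
} sensor_event_stub_s;

/* Hypothetical helper that remembers the timestamp of the previous event. */
typedef struct {
    bool has_previous;
    unsigned long long previous_timestamp;
} sample_clock_s;

/*
 * Returns the time since the previous event in seconds, or 0.0 for the first
 * event (or if the timestamp went backwards). ticks_per_second converts the
 * timestamp unit to seconds, e.g. 1e6 if timestamps are in microseconds.
 */
static double
elapsed_seconds(sample_clock_s *clk, const sensor_event_stub_s *event,
                double ticks_per_second)
{
    double dt = 0.0;

    if (clk->has_previous && event->timestamp >= clk->previous_timestamp) {
        dt = (double)(event->timestamp - clk->previous_timestamp) / ticks_per_second;
    }
    clk->previous_timestamp = event->timestamp;
    clk->has_previous = true;
    return dt;
}

/* Copies the X, Y and Z components into out; returns false if the event
   carries fewer than three values. */
static bool
read_xyz(const sensor_event_stub_s *event, float out[3])
{
    if (event->value_count < 3) {
        return false;
    }
    for (int i = 0; i < 3; i++) {
        out[i] = event->values[i];
    }
    return true;
}
```

In the real callback the same logic would read event->timestamp and event->values from the sensor_event_s it receives, and the sample_clock_s instance would naturally live in appdata_s.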

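If you need more information about a sensor, you can query its properties through the sensor handle (accelerometer in the registration example):

```c
char *name = NULL;
char *vendor = NULL;
sensor_type_e type;
float min_range;
float max_range;
float resolution;
int min_interval;

/* These getters take the sensor handle (sensor_h), not the listener.
   Check error after each call in production code. */
error = sensor_get_name(accelerometer, &name);
error = sensor_get_vendor(accelerometer, &vendor);
error = sensor_get_type(accelerometer, &type);
error = sensor_get_min_range(accelerometer, &min_range);
error = sensor_get_max_range(accelerometer, &max_range);
error = sensor_get_resolution(accelerometer, &resolution);
error = sensor_get_min_interval(accelerometer, &min_interval);

/* name and vendor are allocated for the caller; release them with free() */
free(name);
free(vendor);
```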

Building user interface components

The sample application is a game in which the ball is controlled with data from the accelerometer. The player must steer the ball into a hole placed at a random position. The ball also bounces elastically off the edges of the screen.
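The elastic reflection from the screen edges can be sketched as a position update followed by a wall check: when the ball crosses an edge, it is placed back on that edge and the velocity component perpendicular to the edge changes sign while keeping its magnitude. The ball_state_s type and step_ball() are hypothetical; in the sample game the velocity lives in appdata_s and the position in the Evas object. Coordinates here refer to the ball's top-left corner, as with evas_object_move().

```c
/* Hypothetical ball state, in pixels and pixels per second. The position is
   the top-left corner of the ball's bounding box. */
typedef struct {
    float x, y;
    float vx, vy;
} ball_state_s;

/*
 * Moves the ball for dt seconds, then reflects it elastically from the edges
 * of a width x height screen. The ball is clamped onto the edge it crossed and
 * the normal velocity component is pointed back into the screen with the same
 * magnitude, so the bounce does not change the ball's speed.
 */
static void
step_ball(ball_state_s *b, float diameter, float width, float height, float dt)
{
    const float max_x = width - diameter;
    const float max_y = height - diameter;

    b->x += b->vx * dt;
    b->y += b->vy * dt;

    if (b->x < 0.0f) {
        b->x = 0.0f;
        if (b->vx < 0.0f) {
            b->vx = -b->vx; /* bounce off the left edge */
        }
    } else if (b->x > max_x) {
        b->x = max_x;
        if (b->vx > 0.0f) {
            b->vx = -b->vx; /* bounce off the right edge */
        }
    }

    if (b->y < 0.0f) {
        b->y = 0.0f;
        if (b->vy < 0.0f) {
            b->vy = -b->vy; /* bounce off the top edge */
        }
    } else if (b->y > max_y) {
        b->y = max_y;
        if (b->vy > 0.0f) {
            b->vy = -b->vy; /* bounce off the bottom edge */
        }
    }
}
```

Checking the sign before flipping keeps a ball that is already moving away from a wall from being turned back into it on the next event.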

Figure 2. Screen of the sample game.

The state of the game is stored in the following structure:

```c
typedef struct appdata {
    Evas_Object *win;
    Evas_Object *ball;
    Evas_Object *hole;

    /* ball velocity components */
    float velocity_x;
    float velocity_y;
} appdata_s;

static int screen_width;
static int screen_height;
```
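The velocity_x and velocity_y fields let the callback accumulate speed over time. One possible update, sketched below, integrates the screen-space acceleration over the elapsed time and then applies a simple linear resistance that removes a fixed fraction of the speed per second. The ball_velocity_s type, update_velocity() and the resistance coefficient k are hypothetical; the sample game's actual formula is not shown in this article.

```c
/* Hypothetical mirror of the velocity fields of appdata_s. */
typedef struct {
    float velocity_x;
    float velocity_y;
} ball_velocity_s;

/*
 * Integrates acceleration (ax, ay) over dt seconds with an explicit Euler step
 * and applies linear resistance: the velocity is scaled by (1 - k * dt),
 * where k is a hypothetical resistance coefficient in 1/s. The factor is
 * clamped at zero so a large dt cannot reverse the motion.
 */
static void
update_velocity(ball_velocity_s *v, float ax, float ay, float k, float dt)
{
    float damping = 1.0f - k * dt;

    if (damping < 0.0f) {
        damping = 0.0f;
    }
    v->velocity_x = (v->velocity_x + ax * dt) * damping;
    v->velocity_y = (v->velocity_y + ay * dt) * damping;
}
```

Because the sensor values are not in pixels, ax and ay would in practice be the screen-space acceleration multiplied by a hypothetical, hand-tuned scale factor.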

The application skeleton was generated by creating a new Tizen Native Basic UI Application project in the SDK, so the generated application initially consists only of a window and a sample control.

Tizen native applications use EFL (Enlightenment Foundation Libraries) for graphics. EFL includes Edje, which lets you separate the UI layout from the rest of the code by describing it in an *.edc file. Because this game does not need a complex UI, we use Evas, one of the lower-level EFL libraries responsible for drawing graphics, to show images and change their properties. For more information, see the Evas documentation.

Objects are drawn in the order in which they were added to their parent, so objects added later appear on top. Geometric transformations such as moving or resizing do not change this order, but it can be changed manually with the following functions:

```c
void evas_object_stack_below(Evas_Object *obj, Evas_Object *below);
void evas_object_stack_above(Evas_Object *obj, Evas_Object *above);
```

The background of the application window can be set as follows:

```c
[…]

static void
create_base_gui(appdata_s *ad)
{
    char buf[PATH_MAX];
    char *res_path;

    […]

    Evas_Object *background = elm_bg_add(ad->win);
    elm_win_resize_object_add(ad->win, background);

    res_path = app_get_resource_path(); /* the caller must free() the result */
    snprintf(buf, sizeof(buf), "%s/bg.png", res_path);
    free(res_path);

    elm_bg_file_set(background, buf, NULL);
    evas_object_size_hint_weight_set(background, EVAS_HINT_EXPAND, EVAS_HINT_EXPAND);

    […]

    evas_object_show(background);
}

[…]
```

Each Evas object is initialized in the create_base_gui() function, which is called from the application's create callback. The background is an Evas_Object; to make it visible, evas_object_show() must be called on it, even though it has already been called on its parent object (the window).

The following code gets the screen resolution of the device:

```c
/* Get the screen dimensions */
elm_win_screen_size_get(ad->win, NULL, NULL, &screen_width, &screen_height);
```

The second and third parameters return the x and y coordinates of the screen's origin. This information is not needed by the game, so NULL is passed for both.

The next step is adding the image of the hole and ball to the application window, and then positioning them.

```c
static void
create_base_gui(appdata_s *ad)
{
    char buf[PATH_MAX];
    char *res_path;

    […]

    /* Add the ball to the window */
    ad->ball = evas_object_image_filled_add(evas_object_evas_get(ad->win));

    res_path = app_get_resource_path(); /* the caller must free() the result */
    snprintf(buf, sizeof(buf), "%s/ball.png", res_path);
    free(res_path);

    evas_object_image_file_set(ad->ball, buf, NULL);
    evas_object_resize(ad->ball, BALL_DIAMETER, BALL_DIAMETER);

    […]

    /* Move the ball to the center of the screen */
    evas_object_move(ad->ball,
            screen_width / 2 - BALL_DIAMETER / 2,
            screen_height / 2 - BALL_DIAMETER / 2);

    […]

    evas_object_show(ad->ball);
}
```
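The hole is placed at a random position. A minimal sketch of that step and of a win test follows, assuming the hole image is a square of side hole_size and that the ball counts as "in the hole" when its center lies within a hypothetical tolerance of the hole's center. random_position() and ball_in_hole() are hypothetical helpers, and rand() is used only for simplicity.

```c
#include <stdbool.h>
#include <stdlib.h>

/*
 * Returns a random top-left coordinate so that an object of the given size
 * fits entirely between 0 and limit. Seed once with srand(), e.g. with the
 * current time, to get a different layout on every run.
 */
static int
random_position(int limit, int size)
{
    int range = limit - size;

    if (range <= 0) {
        return 0; /* the object does not fit; pin it to the origin */
    }
    return rand() % (range + 1);
}

/*
 * True if the center of the ball (top-left at bx, by) lies within tolerance
 * pixels of the center of the hole (top-left at hx, hy).
 */
static bool
ball_in_hole(int bx, int by, int ball_diameter,
             int hx, int hy, int hole_size, int tolerance)
{
    int dx = (bx + ball_diameter / 2) - (hx + hole_size / 2);
    int dy = (by + ball_diameter / 2) - (hy + hole_size / 2);

    /* Compare squared distances to avoid a square root */
    return dx * dx + dy * dy <= tolerance * tolerance;
}
```

In create_base_gui(), the hole could then be positioned with evas_object_move(ad->hole, random_position(screen_width, HOLE_SIZE), random_position(screen_height, HOLE_SIZE)), where HOLE_SIZE is a hypothetical constant analogous to BALL_DIAMETER.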

The ball is moved on the screen with evas_object_move() each time the accelerometer callback is invoked. For smoother animation, you can use an Ecore animator (ecore_animator_add()), which calls your function once per animation frame; see the Ecore documentation for details.

Summary

In this article, you learned how to use sensors and retrieve their properties with the Native API. We also showed how to display images with EFL, the library responsible for displaying graphics in native applications. To explore more of EFL's capabilities, see its documentation.
