Audio I/O

Audio I/O enables your application to manage audio input and output handles for recording and playing raw audio data.

The main features of the Audio I/O API include:

- Recording uncompressed audio

Enables you to capture uncompressed PCM data from an audio device.

- Playing uncompressed audio

Enables you to create a multimedia application that plays uncompressed PCM data.

Recording Uncompressed Audio

Pulse Code Modulation (PCM) data is uncompressed audio. The Audio Input API (in mobile and wearable applications) enables your application to record such data from a microphone-type input device.

Audio data is captured periodically. To receive the PCM data from the input device, you must implement the audio input callback interface, which notifies the application of audio data events, such as a buffer having been filled with captured data.
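The periodic, callback-driven capture described above can be sketched in plain C. This is a simulation, not the Tizen API: the `capture_ctx` type, the `capture_cb` callback type, `on_capture_chunk()`, and the simulated device `capture_deliver()` are all hypothetical. In the real Audio Input API, the equivalent steps are registering a callback with audio_in_set_stream_cb() and, inside it, reading the captured bytes with audio_in_peek() and releasing them with audio_in_drop(); check the API reference for the exact signatures.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical application-side recording context. */
typedef struct {
    unsigned char data[4096]; /* accumulated PCM bytes */
    size_t used;              /* number of valid bytes in data */
} capture_ctx;

/* Hypothetical callback type: invoked each time the (simulated) device
 * has filled a chunk of PCM data. Stands in for the real stream callback. */
typedef void (*capture_cb)(const void *chunk, size_t nbytes, void *user_data);

/* Application callback: append the captured chunk, dropping any bytes
 * that do not fit into the fixed-size context buffer. */
static void on_capture_chunk(const void *chunk, size_t nbytes, void *user_data)
{
    capture_ctx *ctx = user_data;
    size_t room = sizeof ctx->data - ctx->used;
    size_t n = nbytes < room ? nbytes : room;
    memcpy(ctx->data + ctx->used, chunk, n);
    ctx->used += n;
}

/* Simulated device: splits a PCM stream into periodic chunks of
 * period_bytes (must be > 0) and notifies the application once per chunk. */
static void capture_deliver(const unsigned char *pcm, size_t len,
                            size_t period_bytes, capture_cb cb, void *user_data)
{
    for (size_t off = 0; off < len; off += period_bytes) {
        size_t n = len - off < period_bytes ? len - off : period_bytes;
        cb(pcm + off, n, user_data);
    }
}
```

The application never polls the device; it only reacts to each notification, which is the same shape the real callback interface has.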

Before recording audio, you must define the following PCM data settings:

- Input device type:
  - Microphone
- Audio channels:
  - Mono (1 channel)
  - Stereo (2 channels)
- Audio sample type:
  - Unsigned 8-bit PCM
  - Signed 16-bit little endian PCM
- Audio sample rate:
  - 8000 to 48000 Hz
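Together, these settings determine how many bytes a given duration of audio occupies, which drives buffer sizing. The following sketch checks a configuration against the ranges listed above and computes byte counts. The `pcm_format` struct and the `PCM_U8`/`PCM_S16_LE` enum are hypothetical; the Tizen API uses its own enumerations (such as audio_channel_e and audio_sample_type_e) for these settings.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical sample-type enum mirroring the two listed formats. */
typedef enum { PCM_U8, PCM_S16_LE } pcm_sample_type;

typedef struct {
    int channels;         /* 1 = mono, 2 = stereo */
    pcm_sample_type type; /* sample encoding */
    int rate_hz;          /* samples per second, per channel */
} pcm_format;

/* Bytes occupied by one sample of one channel. */
static int pcm_bytes_per_sample(pcm_sample_type type)
{
    return type == PCM_S16_LE ? 2 : 1;
}

/* Check a configuration against the listed channel, type, and rate ranges. */
static bool pcm_format_is_valid(const pcm_format *f)
{
    return (f->channels == 1 || f->channels == 2)
        && (f->type == PCM_U8 || f->type == PCM_S16_LE)
        && f->rate_hz >= 8000 && f->rate_hz <= 48000;
}

/* Bytes needed to hold ms milliseconds of audio in format f:
 * frames = rate * ms / 1000, bytes per frame = channels * bytes per sample. */
static size_t pcm_bytes_for_ms(const pcm_format *f, int ms)
{
    size_t frame = (size_t)f->channels * (size_t)pcm_bytes_per_sample(f->type);
    size_t frames = (size_t)f->rate_hz * (size_t)ms / 1000;
    return frames * frame;
}
```

For example, 20 ms of signed 16-bit little endian stereo at 44100 Hz is 882 frames × 4 bytes = 3528 bytes, while 100 ms of unsigned 8-bit mono at 8000 Hz is only 800 bytes.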

To minimize the overhead of the Audio Input API, use the optimal channel type, sample type, and sample rate, which you can retrieve with the audio_in_get_channel(), audio_in_get_sample_type(), and audio_in_get_sample_rate() functions, respectively.

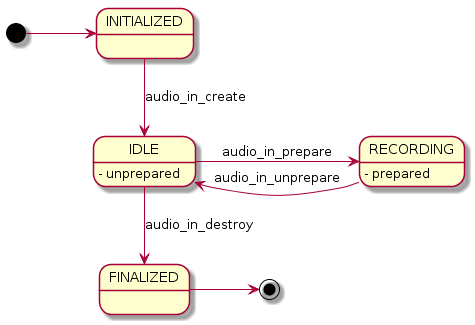

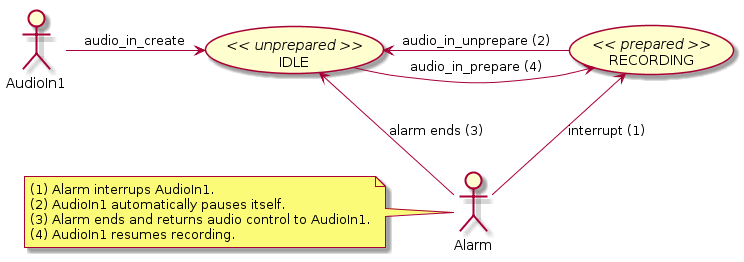

The following figures illustrate the general audio input states, and how the state changes when the audio input is interrupted by the system.

Figure: Audio input states

Figure: Audio input states when interrupted by system

Playing Uncompressed Audio

Pulse Code Modulation (PCM) data is uncompressed audio. The Audio Output API (in mobile and wearable applications) enables your application to play such data using output devices.

To play the audio PCM data, the application must call the audio_out_create() function to initialize the audio output handle.

Before playing audio, your application must define the following PCM data settings:

- Audio channels:
  - Mono (1 channel)
  - Stereo (2 channels)
- Audio sample type:
  - Unsigned 8-bit PCM
  - Signed 16-bit little endian PCM
- Audio sample rate:
  - 8000 to 48000 Hz

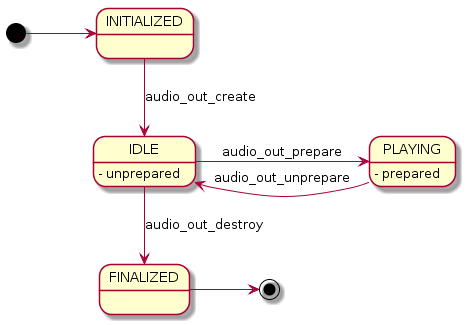

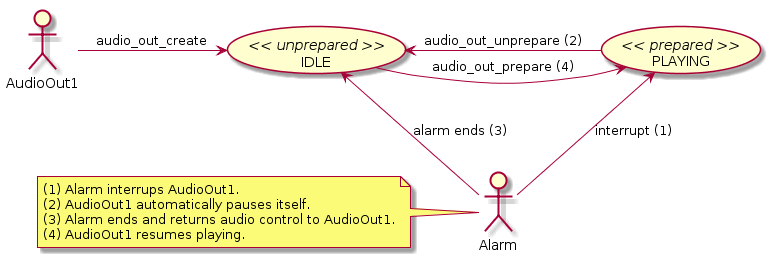

The following figures illustrate the general audio output states, and how the state changes when the audio output is interrupted by the system.

Figure: Audio output states

Figure: Audio output states when interrupted by system

Using Audio Output

To support various low-end Tizen devices, your application must follow these guidelines:

- Do not create an excessive number of Audio Output instances.

Using many Audio Output instances at once degrades the application, because re-sampling and mixing the audio data of every instance imposes a heavy processing load on the system.
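To illustrate the per-sample work that mixing implies, the following sketch mixes two signed 16-bit streams with saturation. If your application needs several simultaneous sounds, one possible approach (an assumption, not a documented requirement) is to mix them yourself like this and feed the result to a single Audio Output instance. The `mix_s16()` helper is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Mix two signed 16-bit PCM buffers sample by sample into out,
 * clamping each sum to the int16_t range to avoid wrap-around distortion. */
static void mix_s16(const int16_t *a, const int16_t *b, int16_t *out, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        int32_t sum = (int32_t)a[i] + (int32_t)b[i];
        if (sum > INT16_MAX)
            sum = INT16_MAX;
        else if (sum < INT16_MIN)
            sum = INT16_MIN;
        out[i] = (int16_t)sum;
    }
}
```

Every additional stream adds another pass of this kind over every sample, which is why many concurrent outputs are expensive on low-end hardware.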

- Use the device-preferred PCM format.

To reduce internal processing overhead, use the PCM format preferred by the target device (for example, signed 16-bit little endian, stereo, 44100 Hz).

Using the preferred format avoids internal operations, such as converting audio samples from mono to stereo or re-sampling the audio to match the target device's sample rate.
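The following sketch shows the kind of work a mismatched format adds: a check for which conversions a source format would need, and the mono-to-stereo duplication that would otherwise happen internally. The `conversions_needed()` and `mono_to_stereo_s16()` helpers and the `CONV_*` flags are hypothetical, and the 44100 Hz stereo format used in the usage note is only the example preferred format from the text.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical flags describing the work needed to match a device format. */
enum {
    CONV_NONE     = 0,
    CONV_CHANNELS = 1 << 0, /* e.g. mono -> stereo */
    CONV_RESAMPLE = 1 << 1  /* e.g. 22050 Hz -> 44100 Hz */
};

/* Report which conversions are needed to turn (src_ch, src_rate)
 * into the device-preferred (dev_ch, dev_rate). */
static int conversions_needed(int src_ch, int src_rate, int dev_ch, int dev_rate)
{
    int flags = CONV_NONE;
    if (src_ch != dev_ch)
        flags |= CONV_CHANNELS;
    if (src_rate != dev_rate)
        flags |= CONV_RESAMPLE;
    return flags;
}

/* Upmix mono signed 16-bit samples to interleaved stereo by copying each
 * sample into both the left and right channel. out must hold 2 * frames. */
static void mono_to_stereo_s16(const int16_t *in, int16_t *out, size_t frames)
{
    for (size_t i = 0; i < frames; i++) {
        out[2 * i] = in[i];     /* left */
        out[2 * i + 1] = in[i]; /* right */
    }
}
```

For a 22050 Hz mono source played on a 44100 Hz stereo device, conversions_needed() reports both CONV_CHANNELS and CONV_RESAMPLE; supplying stereo 44100 Hz data in the first place reports CONV_NONE.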

- Do not call the Audio Output functions too frequently.

Repeatedly cycling through audio_out_create(), audio_out_prepare(), audio_out_unprepare(), and audio_out_destroy() is time-consuming, so call these functions as rarely as possible. Note that the audio_out_prepare() and audio_out_unprepare() functions are much faster than the audio_out_create() and audio_out_destroy() functions.
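The following simulation illustrates one call pattern consistent with this guideline: create the handle once, toggle between prepare and unprepare to start and stop playback, and destroy the handle only when audio is no longer needed. The `sim_out` type and the `sim_*` and `player_*` functions are hypothetical stand-ins that only track state and count calls; they mirror audio_out_create(), audio_out_prepare(), audio_out_unprepare(), and audio_out_destroy() in name only.

```c
#include <stdbool.h>

/* Hypothetical simulated output handle that counts lifecycle calls. */
typedef struct {
    bool created, prepared;
    int create_calls, prepare_calls;
} sim_out;

static void sim_create(sim_out *o)    { o->created = true; o->create_calls++; }
static void sim_prepare(sim_out *o)   { o->prepared = true; o->prepare_calls++; }
static void sim_unprepare(sim_out *o) { o->prepared = false; }
static void sim_destroy(sim_out *o)   { o->created = false; }

/* Start playback: create the handle only on first use (the expensive step),
 * then prepare it (the cheap step). */
static void player_play(sim_out *o)
{
    if (!o->created)
        sim_create(o);
    if (!o->prepared)
        sim_prepare(o);
}

/* Stop playback but keep the handle, so the next play is cheap. */
static void player_stop(sim_out *o)
{
    if (o->prepared)
        sim_unprepare(o);
}

/* Release everything when the application no longer needs audio. */
static void player_close(sim_out *o)
{
    player_stop(o);
    if (o->created)
        sim_destroy(o);
}
```

Playing and stopping five times with this pattern performs one create and five prepares, instead of five full create/destroy cycles.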

- Reduce event delay and remove glitches.

The Audio Output API works with repeated events: it notifies the application each time it needs more data. The smaller the buffer size, the more often events are generated. If you use the Audio Output API with the smallest buffer alongside other event sources (for example, a timer or sensor), delayed events can negatively affect the application. To prevent problems, choose the write buffer size based on the other resources you need.

To ensure that Audio Output events are processed independently, create the Audio Output instance and operate it on the event thread.
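The trade-off between buffer size and event frequency is simple arithmetic. Assuming one event per write buffer consumed (a simplification for illustration), the interval between events equals the playback duration of one buffer. The `buffer_period_us()` and `events_per_second()` helpers are hypothetical.

```c
#include <stddef.h>

/* Playback duration of one write buffer, in microseconds.
 * byte_rate = sample rate * channels * bytes per sample. */
static unsigned long buffer_period_us(size_t buffer_bytes, size_t byte_rate)
{
    return (unsigned long)(buffer_bytes * 1000000ULL / byte_rate);
}

/* Approximate number of events per second, assuming one event per buffer. */
static double events_per_second(size_t buffer_bytes, size_t byte_rate)
{
    return (double)byte_rate / (double)buffer_bytes;
}
```

For signed 16-bit stereo at 44100 Hz (176400 bytes per second), a 3528-byte buffer lasts 20000 µs, giving about 50 events per second; halving the buffer doubles the event rate and halves the time the application has to respond to each event.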

- Use double-buffering.

Use the double-buffering mechanism to reduce latency. Write data twice before starting playback. After playback starts, write one more block of data each time the listener (event) is called.
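Why priming two buffers helps can be seen in a small tick-based model. This is a simulation, not Audio Output code: `simulate_playback()` is hypothetical and assumes that the device consumes exactly one buffer per tick, and that the application's response to each event reaches the queue `lag` ticks later.

```c
/* Hypothetical tick-based model of buffered playback. Each tick the device
 * plays one queued buffer, or underruns (an audible glitch) if none is
 * queued. Each played buffer fires an event; the application's refill for
 * that event lands lag ticks later (lag >= 0). Returns the underrun count. */
static int simulate_playback(int nbuf, int lag, int total)
{
    int arrivals[256] = {0}; /* arrivals[t]: refills landing at tick t */
    int queued = 0, written = 0, played = 0, underruns = 0;

    /* Prime: write nbuf buffers before starting playback. */
    for (int i = 0; i < nbuf && written < total; i++) {
        queued++;
        written++;
    }

    for (int t = 0; played < total && t < 256; t++) {
        if (queued > 0) {
            queued--;
            played++;
            /* Event: the application writes one more buffer. */
            if (written < total && t + lag < 256) {
                arrivals[t + lag]++;
                written++;
            }
        } else {
            underruns++; /* device starved */
        }
        queued += arrivals[t]; /* refills that arrive this tick */
    }
    return underruns;
}
```

With an event delay of one tick, simulate_playback(1, 1, 4) reports 3 underruns, while simulate_playback(2, 1, 4) reports none; in this model, tolerating a longer event delay requires more buffers in flight.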

- Save power.

If the Audio Output is not going to play for a long time, for example because the screen turns off or playback is idle, call the audio_out_unprepare() function to stop the stream and save power. The device cannot go to sleep while the Audio Output is in the PLAYING state.
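One way to decide when playback has been idle "for a long time" is a simple timeout check, run periodically (for example, from a timer). The `idle_policy` type, `idle_on_write()`, `idle_should_unprepare()`, and the threshold value are hypothetical; when the check returns true, the application would call audio_out_unprepare().

```c
#include <stdbool.h>

/* Hypothetical idle policy for releasing an unused output stream. */
typedef struct {
    bool prepared;      /* stream currently prepared */
    long last_write_ms; /* timestamp of the last PCM write */
    long idle_limit_ms; /* hypothetical threshold, for example 5000 */
} idle_policy;

/* Record that PCM data was written at now_ms (the stream is prepared). */
static void idle_on_write(idle_policy *p, long now_ms)
{
    p->prepared = true;
    p->last_write_ms = now_ms;
}

/* Returns true exactly once when the stream has been idle for at least
 * idle_limit_ms; the caller should then unprepare the stream. */
static bool idle_should_unprepare(idle_policy *p, long now_ms)
{
    if (p->prepared && now_ms - p->last_write_ms >= p->idle_limit_ms) {
        p->prepared = false;
        return true;
    }
    return false;
}
```

Because the flag is cleared when the check fires, the application unprepares the stream only once per idle period and prepares it again on the next write.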