Web Audio: Playing Audio Content

This tutorial demonstrates how you can play audio content using the Web Audio API.

This feature is supported in mobile applications only.

Warm-up

Become familiar with the Web Audio API basics by learning about:

- Loading Source Data and Creating Audio Context

  Load the source data using XMLHttpRequest, decode it into audio data, and create an instance of AudioContext.

- Using Modular Routing

  Create nodes, such as a source node, a filter node, and a gain node, and connect them to route the audio to its destination.

- Playing Sound

  Play sound using the noteOn() method.

Loading Data and Creating Audio Context

To provide users with sophisticated audio features, you must learn to load source data using XMLHttpRequest, decode it into audio data, and create an instance of the AudioContext interface:

- To load source audio data:

  - Create an XMLHttpRequest for the source audio file. Set the responseType to arraybuffer to receive a binary response:

      <script>
      var url = "sample.mp3";
      var request = new XMLHttpRequest();
      request.open("GET", url, true);
      request.responseType = "arraybuffer";
      </script>

  - Assign an onload event handler, which is triggered when the response arrives, and retrieve the audio data from the response. Then send the request:

      <script>
      /* Asynchronous event handling */
      request.onload = function() {
          /* Store the still-encoded audio data (an ArrayBuffer) in the audioData variable */
          var audioData = request.response;
      };
      /* Send the request after the handler is assigned */
      request.send();
      </script>

    The response is still encoded audio data. It is decoded into an AudioBuffer when you create the audio buffer.

- To create an audio context:

  Create a WebKit-based AudioContext instance, which plays and manages the audio data:

    <script>
    var context;
    context = new webkitAudioContext();
    </script>

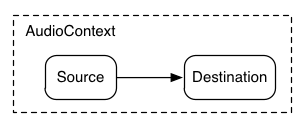

An AudioContext instance supports multiple sound inputs and lets you build an audio graph: you can create one or more sound sources, process them, and connect them to the sound destination.

Most Web Audio API features, such as decoding audio file data, creating audio buffers, and creating AudioNodes, are accessed through the methods of the AudioContext interface.
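
This tutorial creates the context with the prefixed webkitAudioContext constructor, while browsers that implement the current Web Audio API standard expose the unprefixed AudioContext constructor. A minimal sketch of a feature check that prefers the standard name and falls back to the prefixed one is shown below; createAudioContext() and its scope parameter are hypothetical names, and scope exists only so the function can be exercised outside a browser.

```javascript
// Hypothetical helper: create an audio context, preferring the standard
// AudioContext constructor and falling back to webkitAudioContext.
// `scope` defaults to globalThis (the window object in a browser).
function createAudioContext(scope = globalThis) {
  const AudioContextCtor = scope.AudioContext || scope.webkitAudioContext;
  if (typeof AudioContextCtor !== "function") {
    throw new Error("Web Audio API is not supported in this environment");
  }
  return new AudioContextCtor();
}
```

In a browser, calling `var context = createAudioContext();` would replace the direct `new webkitAudioContext()` call.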

- To create an audio buffer:

  Create an AudioBuffer from the ArrayBuffer stored in the response attribute of the XMLHttpRequest object. Use one of the following options:

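  - Create the audio buffer using the createBuffer() method:

      <script>
      var context = new webkitAudioContext();
      var soundSource, soundBuffer;

      function setSound() {
          var url = "sample_audio.mp3";
          var request = new XMLHttpRequest();
          request.open("GET", url, true);
          request.responseType = "arraybuffer";
          /* Asynchronous callback */
          request.onload = function() {
              /* Create the sound source */
              soundSource = context.createBufferSource();
              /* Decode synchronously; true mixes the audio down to mono */
              soundBuffer = context.createBuffer(request.response, true);
              soundSource.buffer = soundBuffer;
          };
          request.send();
      }
      </script>

    The createBuffer() method decodes the audio data synchronously and creates an audio buffer sized to fit the decoded data. Passing true as the second argument mixes the audio down to mono. This ArrayBuffer form of createBuffer() belongs to early WebKit implementations; the current Web Audio API standard only offers createBuffer(numberOfChannels, length, sampleRate), which creates an empty buffer.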

  - Create the audio buffer using the decodeAudioData() method:

      <script>
      var context = new webkitAudioContext();
      var soundSource, bufferData;

      /* Called if the audio data cannot be decoded */
      function onDecodeError() {
          console.log("Error decoding the audio data");
      }

      function setSound() {
          var url = "sample_audio.mp3";
          var request = new XMLHttpRequest();
          request.open("GET", url, true);
          request.responseType = "arraybuffer";
          /* Asynchronous callback */
          request.onload = function() {
              /* Create the sound source */
              soundSource = context.createBufferSource();
              /* The success callback receives the decoded PCM audio data as an AudioBuffer */
              context.decodeAudioData(request.response, function(buffer) {
                  bufferData = buffer;
                  soundSource.buffer = bufferData;
              }, onDecodeError);
          };
          request.send();
      }
      </script>

    The decodeAudioData() method decodes the audio data from the XMLHttpRequest array buffer asynchronously. Because it does not block JavaScript execution while decoding, prefer it over the createBuffer() method.

To play an audio buffer created with the createBuffer() or decodeAudioData() method, assign it to the buffer attribute of an AudioBufferSourceNode.
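
For comparison, the load, decode, and assign steps above can be combined into a single Promise-based function. This is a sketch, assuming a runtime with fetch() and Promises (which early WebKit builds that only offer webkitAudioContext may lack); loadSoundSource() and its fetchFn parameter are hypothetical names. It calls decodeAudioData() with success and error callbacks, a form that both the early WebKit implementations and the current standard accept.

```javascript
// Hypothetical helper: fetch an audio file, decode it, and return an
// AudioBufferSourceNode whose buffer is ready for playback.
// fetchFn defaults to the global fetch() and can be replaced for testing.
function loadSoundSource(context, url, fetchFn = fetch) {
  return fetchFn(url)
    .then((response) => {
      if (!response.ok) {
        throw new Error("Failed to load " + url + ": HTTP " + response.status);
      }
      return response.arrayBuffer();
    })
    .then(
      (arrayBuffer) =>
        new Promise((resolve, reject) => {
          // Callback form of decodeAudioData(): resolve on success, reject on error.
          const maybePromise = context.decodeAudioData(arrayBuffer, resolve, reject);
          // Current implementations also return a Promise; silence its rejection
          // because the error is already reported through reject().
          if (maybePromise && typeof maybePromise.catch === "function") {
            maybePromise.catch(() => {});
          }
        })
    )
    .then((audioBuffer) => {
      // Assign the decoded buffer to a new source node before playback.
      const source = context.createBufferSource();
      source.buffer = audioBuffer;
      return source;
    });
}
```

A caller would then connect the returned node and start it, for example `loadSoundSource(context, "sample_audio.mp3").then((source) => { source.connect(context.destination); source.noteOn(0); });`.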


Source Code

For the complete source code related to this use case, see the following file:

Using Modular Routing

To provide users with sophisticated audio features, you must learn to route audio source data through AudioNode objects:

- To route a sound source directly to the speaker output:

  - Create a WebKit-based AudioContext instance:

      <script>
      var context;
      context = new webkitAudioContext();

  - Route a single audio source directly to the output:

      var soundSource;
      /* soundBuffer is an AudioBuffer created as described in Loading Data and Creating Audio Context */
      function startSound(soundBuffer) {
          soundSource = context.createBufferSource();
          soundSource.buffer = soundBuffer;
          /* Direct routing to the speaker */
          soundSource.connect(context.destination);
      }
      </script>

    All routing occurs within an AudioContext, which contains a single AudioDestinationNode.

- To route to the sound destination through intermediate AudioNodes:

  - Create an AudioContext instance:

      <script>
      var context;
      context = new webkitAudioContext();

  - Create the sound source and the processing nodes:

      var soundSource, soundBuffer, volumeNode, filter, audioAnalyser;
      function startSound(audioData) {
          soundSource = context.createBufferSource();
          /* Early WebKit synchronous decoding; true mixes the audio down to mono */
          soundBuffer = context.createBuffer(audioData, true);
          soundSource.buffer = soundBuffer;
          /* Gain node for volume control (createGain() in the current standard) */
          volumeNode = context.createGainNode();
          filter = context.createBiquadFilter();
          audioAnalyser = context.createAnalyser();

  - Connect the nodes in a chain that processes the output, for example, by adjusting the volume and applying a filter, before it reaches the destination:

          soundSource.connect(volumeNode);
          volumeNode.connect(filter);
          filter.connect(audioAnalyser);
          audioAnalyser.connect(context.destination);
      }
      </script>

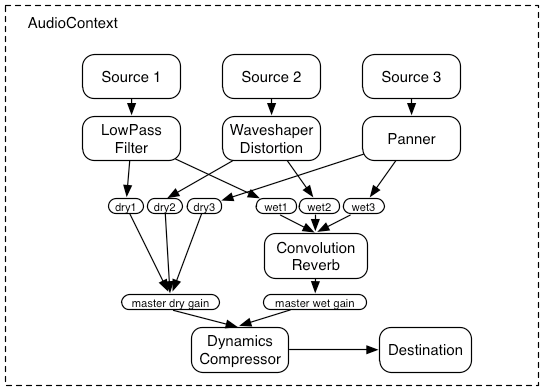

The figure shows an audio graph with 3 sources and a convolution reverb send, with a dynamics compressor at the final output stage.

AudioNodes let you build audio graphs that apply sound effects and adjust audio parameters. For example, you can control the volume with the GainNode interface or filter sounds with the BiquadFilterNode interface.
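
The routing steps above can be generalized into a helper that connects any list of nodes in order. The following sketch assumes the current standard method names (createGain() instead of the early WebKit createGainNode(), and string filter types such as "lowpass"); connectChain() and buildGraph() are hypothetical helpers, not part of the Web Audio API. It routes the source through a gain node and a low-pass filter to the destination and sets a few AudioParam values.

```javascript
// Hypothetical helper: connect each node to the next one in the list and
// return the last node of the chain.
function connectChain(nodes) {
  for (let i = 0; i < nodes.length - 1; i++) {
    nodes[i].connect(nodes[i + 1]);
  }
  return nodes[nodes.length - 1];
}

// Hypothetical helper: source -> gain -> low-pass filter -> destination.
function buildGraph(context, audioBuffer) {
  const source = context.createBufferSource();
  source.buffer = audioBuffer;

  const volumeNode = context.createGain(); // createGainNode() in early WebKit builds
  volumeNode.gain.value = 0.5; // multiply the signal amplitude by 0.5

  const filter = context.createBiquadFilter();
  filter.type = "lowpass"; // string value in the current standard
  filter.frequency.value = 1000; // cutoff frequency in Hz

  connectChain([source, volumeNode, filter, context.destination]);
  return { source, volumeNode, filter };
}
```

Keeping the chain in an array makes it easy to insert another node, such as an AnalyserNode, without rewriting each connect() call.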

Source Code

For the complete source code related to this use case, see the following file:

Playing Sound

To provide users with sophisticated audio features, you must learn to play sound:

- Create a WebKit-based AudioContext instance:

    <script>
    var context;
    context = new webkitAudioContext();

- Play audio through the direct sound destination using the noteOn() method:

    function playSound() {
        var soundSource = context.createBufferSource();
        /* soundBuffer is an AudioBuffer created as described in Loading Data and Creating Audio Context */
        soundSource.buffer = soundBuffer;
        soundSource.connect(context.destination);
        /* Start playback now */
        soundSource.noteOn(context.currentTime);
    }
    </script>

  The noteOn() method takes a time parameter, expressed in seconds on the same timeline as the currentTime property of the AudioContext. If you set the value to 0 (or to any time earlier than currentTime), playback begins immediately.

  The noteOff() method also takes a time parameter. If you set the value to 0, playback stops immediately.

  In the current Web Audio API standard, noteOn() and noteOff() are named start() and stop().

The AudioContext instance decodes the audio source file into audio data. After you create the sound source, playback works by routing the audio data either directly to the speaker or through intermediate AudioNodes that process it.
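
Because an AudioBufferSourceNode can be started only once, repeated playback needs a new source node each time, as playSound() does. The following sketch schedules several one-shot sources at fixed intervals on the AudioContext timeline. scheduleRepeats() is a hypothetical helper; it calls start() where available and falls back to the noteOn() name used in this tutorial.

```javascript
// Hypothetical helper: play audioBuffer `count` times, intervalSeconds apart,
// starting at the context's current time.
function scheduleRepeats(context, audioBuffer, count, intervalSeconds) {
  const sources = [];
  const t0 = context.currentTime; // seconds, the same clock used by start()/noteOn()
  for (let i = 0; i < count; i++) {
    // A source node can only be started once, so create a new one per playback.
    const source = context.createBufferSource();
    source.buffer = audioBuffer;
    source.connect(context.destination);
    const when = t0 + i * intervalSeconds;
    if (typeof source.start === "function") {
      source.start(when); // current standard name
    } else {
      source.noteOn(when); // early WebKit name
    }
    sources.push(source);
  }
  return sources;
}
```

For example, `scheduleRepeats(context, soundBuffer, 4, 0.5)` would start the buffer four times, half a second apart; if the buffer is longer than the interval, the playbacks overlap.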

Source Code

For the complete source code related to this use case, see the following file: