Media App Sample Overview

The Media App sample demonstrates how to develop a media handling application with the ability to open, decode, and transform different media sources, such as video, images, and sound files.

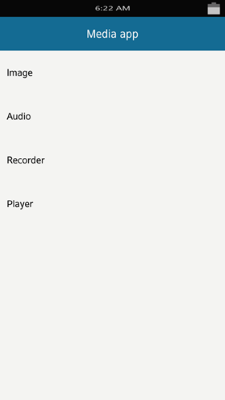

The following figure illustrates the starting screen of the Media App.

Figure: Media App main view

The purpose of this sample application is to demonstrate how to use the Media API to open, decode, and transform images, and to play and record audio and video. Each view contains the UI components needed for its task, such as sliders, toolbars, and buttons.

This sample consists of the following:

- Image
  - Image Viewer: Decode images.
  - Image Converter: Convert images.
  - GIF Viewer: Open and play GIF images.
  - Flip: Flip and rotate images.
  - Image Resize: Resize images.
  - Color Converter: Convert the pixel format of images.
  - Frame Extractor: Extract frames.

- Audio

-

Tone Player

Play the keypad tone sounds

-

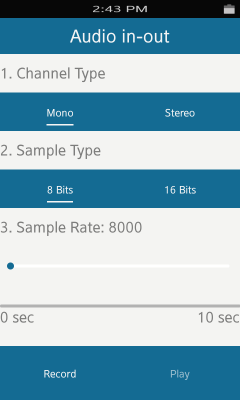

Audio In Out

Record and play audio.

-

Audio Equalizer

Control the equalizer.

-

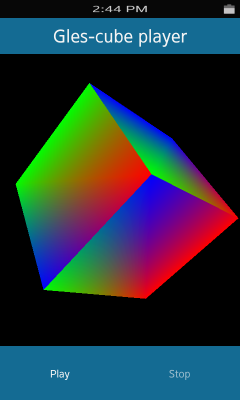

Gles Cube Player

Manage the OpenGL® functionality with audio streaming.

-

Tone Player

- Player

-

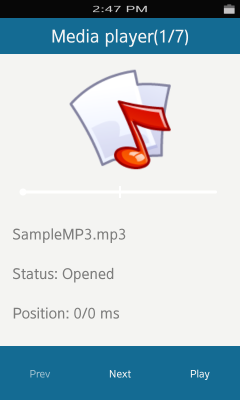

Media Player

Play media from different sources

-

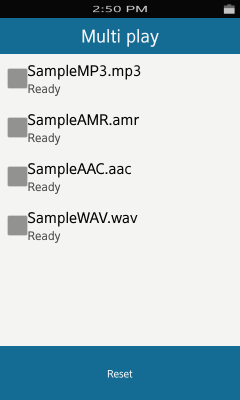

Multi Play

Play different kinds of media simultaneously.

-

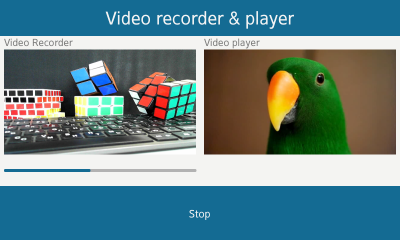

Video Recorder and Player

Manage camera recording and video player.

-

Media Player

- Recorder

-

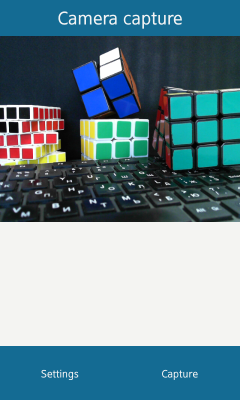

Camera Capture

Manage the camera frame capturing.

-

Video Recorder

Manage video recording.

-

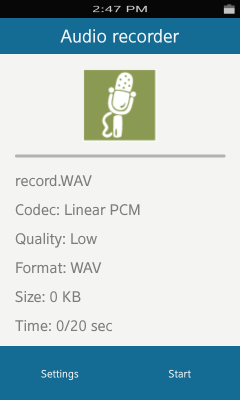

Audio Recorder

Manage audio recording.

-

Camera Capture

Image

Image Viewer

This view allows the user to decode images, change their scale, convert their pixel format, and fit them to the display. Internally, when the resize slider is changed and released, the _slider_drag_stop_cb callback is called and the _update_scale method is executed:

static void

_update_scale(image_viewer_view *view)

{

RETM_IF(NULL == view, "view is NULL");

Evas_Object *evas_image = elm_image_object_get(view->image);

double scale = elm_slider_value_get(view->slider) / DEFAULT_SCALE;

Evas_Coord_Rectangle new_geometry = {};

bool result = ic_calc_image_new_geometry(evas_image, view->image_scroller, scale, &new_geometry);

RETM_IF(!result, "error: on image new geometry calculation");

evas_object_image_fill_set(evas_image, 0, 0, new_geometry.w, new_geometry.h);

evas_object_resize(evas_image, new_geometry.w, new_geometry.h);

evas_object_move(evas_image, new_geometry.x, new_geometry.y);

_update_scale_factor_params(view);

}

Figure: Image viewer
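The ic_calc_image_new_geometry helper is not shown in this overview. As a rough illustration only, a geometry calculation of this kind could scale the image size and center the result in its container. The `rect` type and the `calc_scaled_geometry` function below are hypothetical names, not part of the sample or of EFL:

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical rectangle type, standing in for Evas_Coord_Rectangle. */
typedef struct {
    int x, y, w, h;
} rect;

/* Scales an image of image_w x image_h pixels and centers it in container.
 * Returns false when the input is unusable. */
static bool
calc_scaled_geometry(int image_w, int image_h, rect container, double scale, rect *out)
{
    if (!out || image_w <= 0 || image_h <= 0 || scale <= 0.0)
        return false;

    /* Round the scaled size to the nearest pixel. */
    out->w = (int) (image_w * scale + 0.5);
    out->h = (int) (image_h * scale + 0.5);

    /* Center the result; a negative offset means the image overflows the container. */
    out->x = container.x + (container.w - out->w) / 2;
    out->y = container.y + (container.h - out->h) / 2;
    return true;
}
```

With a 100 x 50 image, a 200 x 200 container, and a scale of 2.0, this sketch yields a 200 x 100 rectangle at offset (0, 50).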

Image Converter

This view allows the user to transform a source image into a new image with a different pixel format and size. Internally, when the Change pixel format button is clicked, the _change_format callback is called and the _process method is executed. The _process method resizes the image with the image_sample_util_resize method and saves the result:

int

image_sample_util_resize(const int src_w, const int src_h, const uchar *src,

const int dest_w, const int dest_h, uchar *dest)

{

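// The bilinear filter reads a 2x2 neighborhood and divides by (dest - 1), so every dimension must be at least 2

if (src_w < 2 || src_h < 2 || !src || dest_w < 2 || dest_h < 2 || !dest)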

{

return IMAGE_UTIL_ERROR_INVALID_PARAMETER;

}

int h = 0, w = 0;

float t, u, coef;

t = u = coef = 0.0;

float c1, c2, c3, c4;

c1 = c2 = c3 = c4 = 0.0;

u_int32_t pixel1, pixel2, pixel3, pixel4;

pixel1 = pixel2 = pixel3 = pixel4 = 0;

u_int32_t *pixel_res = NULL;

u_int32_t red, green, blue, alpha;

red = green = blue = alpha = 0;

int i = 0, j = 0;

for (j = 0; j < dest_h; j++)

{

coef = (float) (j) / (float) (dest_h - 1) * (src_h - 1);

h = (int) floor(coef);

if (h < 0)

{

h = 0;

}

else

{

if (h >= src_h - 1)

{

h = src_h - 2;

}

}

u = coef - h;

for (i = 0; i < dest_w; i++)

{

coef = (float) (i) / (float) (dest_w - 1) * (src_w - 1);

w = (int) floor(coef);

if (w < 0)

{

w = 0;

}

else

{

if (w >= src_w - 1)

{

w = src_w - 2;

}

}

t = coef - w;

c1 = (1 - t) * (1 - u);

c2 = t * (1 - u);

c3 = t * u;

c4 = (1 - t) * u;

pixel1 = *((u_int32_t*) (src + BRGA_BPP * (h * src_w + w)));

pixel2 = *((u_int32_t*) (src + BRGA_BPP * (h * src_w + w + 1)));

pixel3 = *((u_int32_t*) (src + BRGA_BPP * ((h + 1) * src_w + w + 1)));

pixel4 = *((u_int32_t*) (src + BRGA_BPP * ((h + 1) * src_w + w)));

blue = (uchar) pixel1 * c1 + (uchar) pixel2 * c2

+ (uchar) pixel3 * c3 + (uchar) pixel4 * c4;

green = (uchar) (pixel1 >> 8) * c1

+ (uchar) (pixel2 >> 8) * c2

+ (uchar) (pixel3 >> 8) * c3

+ (uchar) (pixel4 >> 8) * c4;

red = (uchar) (pixel1 >> 16) * c1

+ (uchar) (pixel2 >> 16) * c2

+ (uchar) (pixel3 >> 16) * c3

+ (uchar) (pixel4 >> 16) * c4;

alpha = (uchar) (pixel1 >> 24) * c1

+ (uchar) (pixel2 >> 24) * c2

+ (uchar) (pixel3 >> 24) * c3

+ (uchar) (pixel4 >> 24) * c4;

pixel_res = (u_int32_t*)(dest + BRGA_BPP * (i + j * dest_w));

*pixel_res = ((u_int32_t) alpha << 24) | ((u_int32_t) red << 16)

| ((u_int32_t) green << 8) | (blue);

}

}

return IMAGE_UTIL_ERROR_NONE;

}

static bool

_process(image_converter_view *view, double scale)

{

RETVM_IF(NULL == view, false, "view is NULL");

RETVM_IF(NULL == view->image_source, false, "image source is NULL");

bool result = true;

int w = 0, h = 0;

Evas_Object* source_eo = elm_image_object_get(view->image_source);

RETVM_IF(NULL == source_eo, false, "evas_object image is NULL");

long long ticks_begin = get_ticks();

evas_object_image_size_get(source_eo, &w, &h);

RETVM_IF(0 == w * h, false, "wrong size of source image: %d x %d", w, h);

int dest_width = scale * w;

int dest_height = scale * h;

view->scale_perc = scale * SLIDER_MAX;

int error = IMAGE_UTIL_ERROR_NONE;

unsigned int dest_size = 0;

unsigned char* dest_buff = NULL;

void* src_buff = evas_object_image_data_get(source_eo, EINA_FALSE);

RETVM_IF(NULL == src_buff, false, "image source buffer is NULL");

error = image_util_calculate_buffer_size(dest_width, dest_height, IMAGE_UTIL_COLORSPACE_BGRA8888, &dest_size);

RETVM_IF(IMAGE_UTIL_ERROR_NONE != error, false, "image_util_calculate_buffer_size error");

dest_buff = malloc(dest_size);

RETVM_IF(NULL == dest_buff, false, "malloc destination buffer error %d", dest_size);

error = image_sample_util_resize(w, h, src_buff, dest_width, dest_height, dest_buff);

if (IMAGE_UTIL_ERROR_NONE == error)

{

Evas_Object* im = evas_object_image_filled_add(evas_object_evas_get(view->layout));

if (im)

{

evas_object_image_colorspace_set(im, EVAS_COLORSPACE_ARGB8888);

evas_object_image_alpha_set(im, EINA_FALSE);

evas_object_image_size_set(im, dest_width, dest_height);

evas_object_image_data_copy_set(im, dest_buff);

char output_buf[PATH_MAX] = {'\0'};

snprintf(output_buf, PATH_MAX, "%s%s%s.%s", get_resource_path(""), IMAGE_OUTPUT_DIR, image_names[view->image_id],

image_file_format_to_str(converted_formats[view->convert_format_ind]));

if (EINA_FALSE == evas_object_image_save(im, output_buf, NULL, NULL))

{

ERR("evas_object_image_save error");

result = false;

}

else

{

strcpy(view->image_converted_uri, output_buf);

}

evas_object_del(im);

view->ticks = get_ticks() - ticks_begin;

}

}

else

{

INF("Image buffer manipulation: image_sample_util_resize error");

result = false;

}

free(dest_buff);

dest_buff = NULL;

return result;

}

Figure: Image converter
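The resize routine above is a bilinear filter: each destination pixel is a weighted mix of the four nearest source pixels, using the weights c1 to c4. The following minimal sketch shows the same weighting for a single 8-bit channel. The `sample_bilinear` name and the single-channel layout are assumptions made for illustration, and unlike the sample code, the sketch rounds the result instead of truncating it:

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper: bilinearly samples a tightly packed, single-channel
 * 8-bit image at the fractional position (fx, fy). */
static uint8_t
sample_bilinear(const uint8_t *src, int src_w, int src_h, float fx, float fy)
{
    assert(src && src_w >= 2 && src_h >= 2);
    assert(fx >= 0.0f && fy >= 0.0f);

    /* For non-negative values, truncation is the same as floor(). */
    int w = (int) fx;
    int h = (int) fy;

    /* Clamp so that the (w + 1, h + 1) neighbor stays inside the image. */
    if (w > src_w - 2) w = src_w - 2;
    if (h > src_h - 2) h = src_h - 2;

    float t = fx - (float) w;   /* horizontal distance from the left pixel */
    float u = fy - (float) h;   /* vertical distance from the top pixel */

    float c1 = (1 - t) * (1 - u);   /* top-left weight */
    float c2 = t * (1 - u);         /* top-right weight */
    float c3 = t * u;               /* bottom-right weight */
    float c4 = (1 - t) * u;         /* bottom-left weight */

    float value = src[h * src_w + w] * c1
                + src[h * src_w + w + 1] * c2
                + src[(h + 1) * src_w + w + 1] * c3
                + src[(h + 1) * src_w + w] * c4;

    return (uint8_t) (value + 0.5f);
}
```

The four weights always sum to 1, so the result stays within the range of the four source values.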

GIF Viewer

This view displays GIF images, and allows the user to change the pixel format. Internally, when the Next or Prev button is clicked, the _toolbar_button_clicked_cb callback is called and the _change_image method is executed:

static void

_change_image(gif_viewer_view *this, bool to_next)

{

RETM_IF(NULL == this, "this is NULL");

unsigned int image_id = this->image_id;

RETM_IF((to_next && image_id + 1 >= IMAGES_COUNT), "Out of image container");

RETM_IF((!to_next && image_id == 0), "Out of image container");

if (to_next)

{

++image_id;

}

else

{

--image_id;

}

if (!ic_load_image(this->image, image_file_names[image_id]))

{

ERR("ic_load_image failed");

}

ic_image_set_animated(this->image);

this->image_id = image_id;

_update_view_controls(this);

}

Figure: GIF viewer

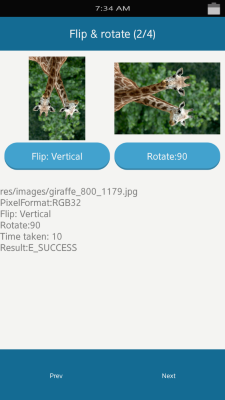
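The two guards at the top of _change_image keep the unsigned image index inside the container before it is incremented or decremented. A minimal standalone sketch of the same bounds check is shown below; the `step_index` helper is a hypothetical name used only for illustration:

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical helper: moves an index one step forward or backward within
 * [0, count). Returns false and leaves *out untouched when the step would
 * leave the container. */
static bool
step_index(unsigned int current, unsigned int count, bool to_next, unsigned int *out)
{
    assert(out);

    if (to_next) {
        /* Written without "current + 1" so the check cannot overflow. */
        if (count == 0 || current >= count - 1)
            return false;
        *out = current + 1;
    } else {
        /* Decrementing an unsigned zero would wrap around. */
        if (current == 0)
            return false;
        *out = current - 1;
    }
    return true;
}
```

Checking before modifying the index matters here because the index is unsigned: decrementing 0 would silently wrap to a very large value.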

Flip

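This view allows the user to flip and rotate images. Internally, the Flip button is handled by the _on_button_flip_clicked_cb callback and the Rotate button by the _on_button_rotate_clicked_cb callback. The rotate callback advances to the next rotation state and wraps around after the last one: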

static void

_on_button_rotate_clicked_cb(void *data, Evas_Object *obj, void *event_info)

{

RETM_IF(NULL == data, "data is NULL");

image_flip_rotate_view *view = data;

if (view->rotate_state + 1 < ROTATE_STATES_MAX)

{

++(view->rotate_state);

}

else

{

view->rotate_state = ROTATE_STATE_0;

}

elm_image_orient_set(view->image_rotate, _convert_to_elm_rotate_state(view->rotate_state));

_update_rotate_state(view);

_update_label(view);

}

Figure: Flip and rotate view

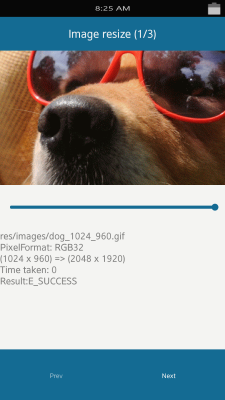
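The wrap-around logic in the rotate callback can also be expressed with a modulo operation. The sketch below assumes four rotation states in 90-degree steps; the enumerator names are hypothetical, since only ROTATE_STATE_0 and ROTATE_STATES_MAX appear in the sample code:

```c
#include <assert.h>

/* Hypothetical rotation states; ROT_COUNT plays the role of ROTATE_STATES_MAX. */
typedef enum {
    ROT_0 = 0,
    ROT_90,
    ROT_180,
    ROT_270,
    ROT_COUNT
} rot_state;

/* Advances to the next state and wraps to ROT_0 after ROT_270. */
static rot_state
rot_next(rot_state state)
{
    return (rot_state) (((int) state + 1) % (int) ROT_COUNT);
}

/* Converts a state to the rotation angle in degrees. */
static int
rot_degrees(rot_state state)
{
    return (int) state * 90;
}
```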

Image Resize

This view allows the user to change the image size. Internally, when the resize slider is changed, the _slider_drag_stop_cb callback is called and the _update_scale method is executed:

static void

_update_scale(image_resize_view *view)

{

RETM_IF(NULL == view, "view is NULL");

Evas_Object *evas_image = elm_image_object_get(view->image);

double scale = elm_slider_value_get(view->slider) / DEFAULT_SCALE;

Evas_Coord_Rectangle new_geometry = {};

bool result = ic_calc_image_new_geometry(evas_image, view->image_scroller, scale, &new_geometry);

RETM_IF(!result, "error: on image new geometry calculation");

evas_object_image_fill_set(evas_image, 0, 0, new_geometry.w, new_geometry.h);

evas_object_resize(evas_image, new_geometry.w, new_geometry.h);

evas_object_move(evas_image, new_geometry.x, new_geometry.y);

view->image_scaled_size.w = new_geometry.w;

view->image_scaled_size.h = new_geometry.h;

}

Figure: Image resize

Color Converter

This view allows the user to change the image pixel format (RGB32, RGB16, YUV420, or YUV422). Internally, when the Change pixel format button is clicked, the _toolbar_button_clicked_cb callback is called and the _process_colorspace_convert method is executed:

static bool

_process_colorspace_convert(color_converter_view *view)

{

long long time_begin = get_ticks();

RETVM_IF(NULL == view, false, "view is NULL");

RETVM_IF(NULL == view->image_source, false, "image is NULL");

Evas_Object* evas_image = elm_image_object_get(view->image_source);

RETVM_IF(NULL == evas_image, false, "evas image is NULL");

free(view->decoded_buffer);

view->decoded_buffer = NULL;

int w = 0, h = 0;

evas_object_image_size_get(evas_image, &w, &h);

RETVM_IF(0 == w * h, false, "wrong size of image: %d x %d", w, h);

void* src_buff = evas_object_image_data_get(evas_image, EINA_FALSE);

RETVM_IF(NULL == src_buff, false, "image source buffer is NULL");

image_util_colorspace_e src_colorspace = SOURCE_COLORSPACE;

image_util_colorspace_e dest_colorspace = colorspaces[view->color_id_to];

int error = IMAGE_UTIL_ERROR_NONE;

unsigned int dest_size = 0;

error = image_util_calculate_buffer_size(w, h, dest_colorspace, &dest_size);

RETVM_IF(IMAGE_UTIL_ERROR_NONE != error, false, "image_util_calculate_buffer_size error");

view->decoded_buffer = malloc(dest_size);

RETVM_IF(NULL == view->decoded_buffer, false, "malloc destination buffer error %d", dest_size);

error = image_sample_util_convert_colorspace(view->decoded_buffer, dest_colorspace, src_buff, w, h, src_colorspace);

RETVM_IF(IMAGE_UTIL_ERROR_NONE != error, false, "image_sample_util_convert_colorspace error");

view->decode_time = get_ticks() - time_begin;

return true;

}

Figure: Color converter
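Converting between RGB32 and RGB16 comes down to dropping or restoring the low bits of each channel. The following sketch assumes the common RGB565 layout (5 bits red, 6 bits green, 5 bits blue, red in the most significant bits); the sample's own shift constants are not shown in this overview, and the function names are hypothetical:

```c
#include <assert.h>
#include <stdint.h>

/* Packs 8-bit channels into a 16-bit RGB565 pixel by keeping only the
 * most significant bits of each channel. */
static uint16_t
pack_rgb565(uint8_t r, uint8_t g, uint8_t b)
{
    return (uint16_t) (((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3));
}

/* Expands an RGB565 pixel back to 8-bit channels. The dropped low bits
 * are restored as zeros, so the round trip is lossy. */
static void
unpack_rgb565(uint16_t pixel, uint8_t *r, uint8_t *g, uint8_t *b)
{
    assert(r && g && b);
    *r = (uint8_t) (((pixel >> 11) & 0x1F) << 3);
    *g = (uint8_t) (((pixel >> 5) & 0x3F) << 2);
    *b = (uint8_t) ((pixel & 0x1F) << 3);
}
```

For example, pure white (255, 255, 255) packs to 0xFFFF and unpacks to (248, 252, 248), which shows the precision lost in the 16-bit format.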

Frame Extractor

This view allows the user to extract frames from the input video stream. Internally, when the frame slider is changed, the _sliderbar_changed_cb callback is called and the frame_extractor_frame_get method is executed:

static inline rgb16

bgra_to_rgb(const bgra32 src)

{

rgb16 result = (rgb16)

(((src.r >> (RGB32_CHANEL_BIT_SIZE - RGB16_R_SIZE)) << RGB16_R_SHIFT)

| ((src.g >> (RGB32_CHANEL_BIT_SIZE - RGB16_G_SIZE)) << RGB16_G_SHIFT)

| ((src.b >> (RGB32_CHANEL_BIT_SIZE - RGB16_B_SIZE)) << RGB16_B_SHIFT));

return result;

}

static inline bgra32

rgb_to_bgra(const rgb16 src)

{

bgra32 result = {};

result.r = (uchar)(src >> RGB16_R_SHIFT) << (RGB32_CHANEL_BIT_SIZE - RGB16_R_SIZE);

result.g = (uchar)(src >> RGB16_G_SHIFT) << (RGB32_CHANEL_BIT_SIZE - RGB16_G_SIZE);

result.b = (uchar)(src >> RGB16_B_SHIFT) << (RGB32_CHANEL_BIT_SIZE - RGB16_B_SIZE);

result.a = RGB32_DEFAULT_ALPHA;

return result;

}

static uchar

clamp(int x)

{

if (x > 255)

{

x = 255;

}

else if (x < 0)

{

x = 0;

}

return x;

}

static inline uchar

bgra_to_yuv_y(const bgra32 src)

{

return ((66*src.r + 129*src.g + 25*src.b) >> 8) + 16;

}

static inline uchar

bgra_to_yuv_u(const bgra32 src)

{

return ((-38*src.r + -74*src.g + 112*src.b) >> 8) + 128;

}

static inline uchar

bgra_to_yuv_v(const bgra32 src)

{

return ((112*src.r + -94*src.g + -18*src.b) >> 8) + 128;

}

static inline uchar

yuv_to_r(const uchar yuv_y, const uchar yuv_u, const uchar yuv_v)

{

return clamp(yuv_y + 1.402 * (yuv_v - 128));

}

static inline uchar

yuv_to_g(const uchar yuv_y, const uchar yuv_u, const uchar yuv_v)

{

return clamp(yuv_y - 0.344 * (yuv_u - 128) - 0.714 * (yuv_v - 128));

}

static inline uchar

yuv_to_b(const uchar yuv_y, const uchar yuv_u, const uchar yuv_v)

{

return clamp(yuv_y + 1.772 * (yuv_u - 128));

}

static void

_convert_bgra8888_to_yuv420(uchar *dest, const uchar *src,

const int width, const int height,

const int d_size, const int s_size)

{

unsigned int src_stride = (s_size / height);

unsigned int pix_count = width * height;

unsigned int upos = pix_count;

unsigned int vpos = upos + upos / 4;

unsigned int y;

for (y = 0; y < height; ++y)

{

const bgra32* src_pixel = (const bgra32*) &(src[y * src_stride]);

unsigned int dest_line_pos = width * y;

if (!(y % 2))

{

unsigned int x;

for (x = 0; x < width; x += 2)

{

bgra32 pixel = src_pixel[x];

dest[dest_line_pos + x] = bgra_to_yuv_y(pixel);

dest[upos++] = bgra_to_yuv_u(pixel);

dest[vpos++] = bgra_to_yuv_v(pixel);

pixel = src_pixel[x + 1];

dest[dest_line_pos + x + 1] = bgra_to_yuv_y(pixel);

}

}

else

{

unsigned int x;

for (x = 0; x < width; ++x)

{

dest[dest_line_pos + x] = bgra_to_yuv_y(src_pixel[x]);

}

}

}

}

static void

_convert_bgra8888_to_rgb565(uchar *dest, const uchar *src,

const int width, const int height,

const int d_size, const int s_size)

{

unsigned int src_stride = (s_size / height);

unsigned int dest_stride = (d_size / height);

unsigned int y;

for (y = 0; y < height; ++y)

{

const bgra32* src_pixel = (const bgra32*) &(src[y * src_stride]);

rgb16* dest_pixel = (rgb16*) &(dest[y * dest_stride]);

unsigned int x;

for (x = 0; x < width; ++x)

{

dest_pixel[x] = bgra_to_rgb( src_pixel[x]);

}

}

}

static void

_convert_rgb565_to_yuv420(uchar *dest, const uchar *src,

const int width, const int height,

const int d_size, const int s_size)

{

unsigned int src_stride = (s_size / height);

unsigned int pix_count = width * height;

unsigned int upos = pix_count;

unsigned int vpos = upos + upos / 4;

unsigned int y;

for (y = 0; y < height; ++y)

{

const rgb16* src_pixel = (const rgb16*) &(src[y * src_stride]);

unsigned int dest_line_pos = width * y;

if (!(y % 2))

{

unsigned int x;

for (x = 0; x < width; x += 2)

{

bgra32 pixel = rgb_to_bgra(src_pixel[x]);

dest[dest_line_pos + x] = bgra_to_yuv_y(pixel);

dest[upos++] = bgra_to_yuv_u(pixel);

dest[vpos++] = bgra_to_yuv_v(pixel);

pixel = rgb_to_bgra(src_pixel[x + 1]);

dest[dest_line_pos + x + 1] = bgra_to_yuv_y(pixel);

}

}

else

{

unsigned int x;

for (x = 0; x < width; ++x)

{

bgra32 pixel = rgb_to_bgra(src_pixel[x]);

dest[dest_line_pos + x] = bgra_to_yuv_y(pixel);

}

}

}

}

static void

_convert_rgb565_to_bgra8888(uchar *dest, const uchar *src,

const int width, const int height,

const int d_size, const int s_size)

{

unsigned int src_stride = (s_size / height);

unsigned int dest_stride = (d_size / height);

unsigned int y;

for (y = 0; y < height; ++y)

{

const rgb16* src_pixel = (const rgb16*) &(src[y * src_stride]);

bgra32* dest_pixel = (bgra32*) &(dest[y * dest_stride]);

unsigned int x;

for (x = 0; x < width; ++x)

{

dest_pixel[x] = rgb_to_bgra(src_pixel[x]);

}

}

}

static void

_convert_yuv420_to_rgb565(uchar *dest, const uchar *src,

const int width, const int height,

const int d_size, const int s_size)

{

unsigned int pix_count = width * height;

unsigned int dest_stride = (d_size / height);

unsigned int y;

for (y = 0; y < height; y++)

{

rgb16* dest_pixel = (rgb16*) &(dest[y*dest_stride]);

unsigned int x;

for (x = 0; x < width; x++)

{

uchar yuv_y = src[ y*width + x];

uchar yuv_u = src[ (int)(pix_count + (y/2)*(width/2) + x/2)];

uchar yuv_v = src[ (int)(pix_count*1.25 + (y/2)*(width/2) + x/2)];

dest_pixel[x] =

((yuv_to_r(yuv_y, yuv_u, yuv_v) >> (RGB32_CHANEL_BIT_SIZE - RGB16_R_SIZE)) << RGB16_R_SHIFT)

| ((yuv_to_g(yuv_y, yuv_u, yuv_v) >> (RGB32_CHANEL_BIT_SIZE - RGB16_G_SIZE)) << RGB16_G_SHIFT)

| ((yuv_to_b(yuv_y, yuv_u, yuv_v) >> (RGB32_CHANEL_BIT_SIZE - RGB16_B_SIZE)) << RGB16_B_SHIFT);

}

}

}

static void

_convert_yuv420_to_bgra8888(uchar *dest, const uchar *src,

const int width, const int height,

const int d_size, const int s_size)

{

unsigned int pix_count = width * height;

unsigned int dest_stride = (d_size / height);

unsigned int y;

for (y = 0; y < height; y++)

{

bgra32* dest_pixel = (bgra32*) &(dest[dest_stride * y]);

unsigned int x;

for (x = 0; x < width; x++)

{

uchar yuv_y = src[ y*width + x];

uchar yuv_u = src[ (int)(pix_count + (y/2)*(width/2) + x/2)];

uchar yuv_v = src[ (int)(pix_count*1.25 + (y/2)*(width/2) + x/2)];

dest_pixel[x].r = yuv_to_r(yuv_y, yuv_u, yuv_v);

dest_pixel[x].g = yuv_to_g(yuv_y, yuv_u, yuv_v);

dest_pixel[x].b = yuv_to_b(yuv_y, yuv_u, yuv_v);

dest_pixel[x].a = RGB32_DEFAULT_ALPHA;

}

}

}

static void

_convert_yuv422_to_bgra8888(uchar *dest, const uchar *src,

const int width, const int height,

const int d_size, const int s_size)

{

unsigned int dest_stride = (d_size / height);

uchar yuv_u, yuv_v, yuv_y1, yuv_y2;

yuv_u = yuv_v = yuv_y1 = yuv_y2 = 0;

unsigned int y;

for (y = 0; y < height; y++)

{

bgra32* dest_pixel = (bgra32*) &(dest[dest_stride * y]);

const uchar * src_line = &(src[2 * y * width]);

unsigned int x;

for (x = 0; x < width; x += 2)

{

yuv_y1 = src_line[2 * x + 1];

yuv_y2 = src_line[2 * x + 3];

yuv_u = src_line[2 * x];

yuv_v = src_line[2 * x + 2];

dest_pixel[x].r = yuv_to_r(yuv_y1, yuv_u, yuv_v);

dest_pixel[x].g = yuv_to_g(yuv_y1, yuv_u, yuv_v);

dest_pixel[x].b = yuv_to_b(yuv_y1, yuv_u, yuv_v);

dest_pixel[x].a = RGB32_DEFAULT_ALPHA;

dest_pixel[x + 1].r = yuv_to_r(yuv_y2, yuv_u, yuv_v);

dest_pixel[x + 1].g = yuv_to_g(yuv_y2, yuv_u, yuv_v);

dest_pixel[x + 1].b = yuv_to_b(yuv_y2, yuv_u, yuv_v);

dest_pixel[x + 1].a = RGB32_DEFAULT_ALPHA;

}

}

}

static void

_convert_rgb888_to_bgra8888(uchar *dest, const uchar *src,

const int width, const int height,

const int d_size, const int s_size)

{

unsigned int src_stride = (s_size / height);

unsigned int dest_stride = (d_size / height);

unsigned int y;

for (y = 0; y < height; ++y)

{

const uchar* src_pixel = &(src[y * src_stride]);

bgra32* dest_pixel = (bgra32*) &(dest[y * dest_stride]);

unsigned int x;

for (x = 0; x < width; ++x)

{

dest_pixel[x].r = src_pixel[RGB24_BPP * x];

dest_pixel[x].g = src_pixel[RGB24_BPP * x + 1];

dest_pixel[x].b = src_pixel[RGB24_BPP * x + 2];

dest_pixel[x].a = RGB32_DEFAULT_ALPHA;

}

}

}

int

image_sample_util_convert_colorspace(uchar *dest, image_util_colorspace_e dest_colorspace,

const uchar *src, int width, int height,

image_util_colorspace_e src_colorspace)

{

RETVM_IF(NULL == src, IMAGE_UTIL_ERROR_INVALID_PARAMETER, "source buffer is NULL");

RETVM_IF(NULL == dest, IMAGE_UTIL_ERROR_INVALID_PARAMETER, "destination buffer is NULL");

RETVM_IF(width <= 0 || height <= 0, IMAGE_UTIL_ERROR_INVALID_PARAMETER, "width or height is incorrect");

int error = IMAGE_UTIL_ERROR_NONE;

if (IMAGE_UTIL_COLORSPACE_BGRA8888 != src_colorspace

&& IMAGE_UTIL_COLORSPACE_RGB565 != src_colorspace

&& IMAGE_UTIL_COLORSPACE_I420 != src_colorspace

&& IMAGE_UTIL_COLORSPACE_UYVY != src_colorspace

&& IMAGE_UTIL_COLORSPACE_RGB888 != src_colorspace)

{

ERR("src_colorspace not supported yet");

return IMAGE_UTIL_ERROR_NOT_SUPPORTED_FORMAT;

}

if (IMAGE_UTIL_COLORSPACE_BGRA8888 != dest_colorspace

&& IMAGE_UTIL_COLORSPACE_RGB565 != dest_colorspace

&& IMAGE_UTIL_COLORSPACE_I420 != dest_colorspace)

{

ERR("dest_colorspace not supported yet");

return IMAGE_UTIL_ERROR_NOT_SUPPORTED_FORMAT;

}

unsigned int dest_size = 0;

error = image_util_calculate_buffer_size(width, height, dest_colorspace, &dest_size);

RETVM_IF(IMAGE_UTIL_ERROR_NONE != error, error, "image_util_calculate_buffer_size error");

unsigned int src_size = 0;

error = image_util_calculate_buffer_size(width, height, src_colorspace, &src_size);

RETVM_IF(IMAGE_UTIL_ERROR_NONE != error, error, "image_util_calculate_buffer_size error");

if (dest_colorspace == src_colorspace)

{

memcpy(dest, src, src_size);

return error;

}

if (IMAGE_UTIL_COLORSPACE_BGRA8888 == src_colorspace)

{

if (IMAGE_UTIL_COLORSPACE_I420 == dest_colorspace)

{

_convert_bgra8888_to_yuv420(dest, src, width, height, dest_size, src_size);

}

else

{

_convert_bgra8888_to_rgb565(dest, src, width, height, dest_size, src_size);

}

}

else if (IMAGE_UTIL_COLORSPACE_RGB565 == src_colorspace)

{

if (IMAGE_UTIL_COLORSPACE_I420 == dest_colorspace)

{

_convert_rgb565_to_yuv420(dest, src, width, height, dest_size, src_size);

}

else

{

_convert_rgb565_to_bgra8888(dest, src, width, height, dest_size, src_size);

}

}

else if (IMAGE_UTIL_COLORSPACE_I420 == src_colorspace)

{

if (IMAGE_UTIL_COLORSPACE_RGB565 == dest_colorspace)

{

_convert_yuv420_to_rgb565(dest, src, width, height, dest_size, src_size);

}

else

{

_convert_yuv420_to_bgra8888(dest, src, width, height, dest_size, src_size);

}

}

else if (IMAGE_UTIL_COLORSPACE_UYVY == src_colorspace)

{

if (IMAGE_UTIL_COLORSPACE_BGRA8888 == dest_colorspace)

{

_convert_yuv422_to_bgra8888(dest, src, width, height, dest_size, src_size);

}

else

{

ERR("dest_colorspace not supported yet");

return IMAGE_UTIL_ERROR_NOT_SUPPORTED_FORMAT;

}

}

else

{

if (IMAGE_UTIL_COLORSPACE_BGRA8888 == dest_colorspace)

{

_convert_rgb888_to_bgra8888(dest, src, width, height, dest_size, src_size);

}

else

{

ERR("dest_colorspace not supported yet");

return IMAGE_UTIL_ERROR_NOT_SUPPORTED_FORMAT;

}

}

return error;

}

bool

frame_extractor_frame_get(const frame_extractor *extractor, unsigned char **frame, int pos)

{

bool result = false;

if (extractor && frame)

{

void * buf = NULL;

int size = 0;

int error = METADATA_EXTRACTOR_ERROR_NONE;

error = metadata_extractor_get_frame_at_time(extractor->metadata, pos, false, &buf, &size);

if (METADATA_EXTRACTOR_ERROR_NONE == error && buf)

{

*frame = malloc(size + (extractor->width * extractor->height) * ARGB_PIXEL_SIZE);

if (*frame)

{

error = image_sample_util_convert_colorspace(*frame, IMAGE_UTIL_COLORSPACE_BGRA8888, buf,

extractor->width, extractor->height,

IMAGE_UTIL_COLORSPACE_RGB888);

if (IMAGE_UTIL_ERROR_NONE == error)

{

result = true;

}

else

{

free(*frame);

*frame = NULL;

}

}

free(buf);

}

}

return result;

}

Figure: Frame Extractor
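The YUV420 (I420) conversions above rely on the planar layout of that format: a full-resolution Y plane, followed by U and V planes that each hold one sample per 2 x 2 pixel block. The following sketch, which uses hypothetical names and assumes a tightly packed buffer with even width and height, computes where the three samples for a given pixel are stored:

```c
#include <assert.h>
#include <stddef.h>

/* Byte offsets of the Y, U, and V samples that describe one pixel. */
typedef struct {
    size_t y;
    size_t u;
    size_t v;
} i420_offsets;

/* Total buffer size: one Y byte per pixel plus two quarter-size chroma planes. */
static size_t
i420_buffer_size(int width, int height)
{
    assert(width > 0 && height > 0);
    return (size_t) width * (size_t) height * 3 / 2;
}

/* Returns the sample offsets for pixel (x, y). */
static i420_offsets
i420_offsets_for(int width, int height, int x, int y)
{
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);
    assert(x >= 0 && x < width && y >= 0 && y < height);

    size_t pix_count = (size_t) width * (size_t) height;
    /* Each chroma sample is shared by a 2 x 2 block of pixels. */
    size_t chroma = (size_t) (y / 2) * (size_t) (width / 2) + (size_t) (x / 2);

    i420_offsets off;
    off.y = (size_t) y * (size_t) width + (size_t) x;
    off.u = pix_count + chroma;
    off.v = pix_count + pix_count / 4 + chroma;
    return off;
}
```

Using integer arithmetic for the V plane offset (pix_count + pix_count / 4) avoids the floating-point multiplication by 1.25 while giving the same result for even dimensions.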

Audio

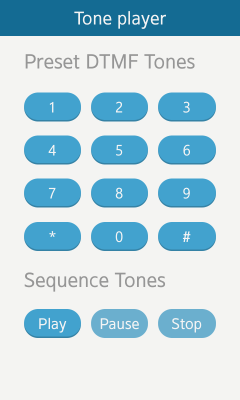

Tone Player

This view allows the user to play tone sounds from the numeric phone keypad. Internally, when a keypad button is pressed, the _on_keypad_btn_down_cb callback starts the corresponding tone, and when the button is released, the _on_keypad_btn_up_cb callback stops it:

static void

_on_keypad_btn_down_cb(void *data, Evas *e, Evas_Object *obj, void *event_info)

{

tone_player_view *view = data;

if (view)

{

tone_player_stop(view->tone_handle);

tone_type_e tone = (tone_type_e)evas_object_data_get(obj, BUTTON_DTMF_KEY);

tone_player_start(tone, SOUND_TYPE_MEDIA, MAX_TONE_DURATION, &view->tone_handle);

}

}

Figure: Tone player

static void

_on_keypad_btn_up_cb(void *data, Evas *e, Evas_Object *obj, void *event_info)

{

tone_player_view *view = data;

if (view)

{

tone_player_stop(view->tone_handle);

}

}
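The BUTTON_DTMF_KEY name suggests that the keypad buttons play DTMF tones. In standard DTMF signaling, each key is encoded as a pair of sine waves, one selected by the keypad row and one by the column. The tone player generates these tones itself, so the following table lookup is only an illustration of what each key represents; the `dtmf_frequencies` function is a hypothetical name:

```c
#include <assert.h>
#include <stdbool.h>

/* Looks up the low (row) and high (column) frequency in Hz for a key on a
 * 12-key keypad. Returns false for keys that are not on the keypad. */
static bool
dtmf_frequencies(char key, int *low_hz, int *high_hz)
{
    static const char layout[4][3] = {
        {'1', '2', '3'},
        {'4', '5', '6'},
        {'7', '8', '9'},
        {'*', '0', '#'}
    };
    static const int row_hz[4] = {697, 770, 852, 941};
    static const int col_hz[3] = {1209, 1336, 1477};

    assert(low_hz && high_hz);

    for (int r = 0; r < 4; ++r) {
        for (int c = 0; c < 3; ++c) {
            if (layout[r][c] == key) {
                *low_hz = row_hz[r];
                *high_hz = col_hz[c];
                return true;
            }
        }
    }
    return false;
}
```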

Audio In Out

This view allows the user to record sounds with the microphone and play them. Additionally, there are options to adjust the channel type and sample rate. Internally, when the Record button is clicked, the _audio_in_start_cb callback is called, which prepares the audio stream to be recorded and starts it in a separate thread.

Figure: Audio in-out

static void

_audio_in_start(audio_inout *obj)

{

audio_buffer *buf = obj->buffer;

char *samples = buf->samples;

int len = 0;

size_t read_bytes = 0;

size_t last_reported_bytes = 0;

do

{

len = AUDIO_BUFFER_SIZE;

if (buf->len + len > buf->capacity)

{

len = buf->capacity - buf->len;

}

if (len > 0)

{

int bytes = audio_in_read((audio_in_h)obj->handle, samples, len);

assert(bytes >= 0);

if (bytes)

{

samples += bytes;

buf->len += bytes;

read_bytes += bytes;

if (read_bytes - last_reported_bytes >= obj->byte_per_sec * PROGRESS_UPD_PERIOD)

{

_report_progress(obj, read_bytes);

last_reported_bytes = read_bytes;

}

}

}

} while(!obj->stop && len > 0);

_report_progress(obj, read_bytes);

}

Once the input audio has been recorded, it can be played back in a separate stream by the _audio_out_start method.
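The recording loop reads the input in fixed-size chunks and shrinks the last chunk so that it never writes past the end of the buffer. The sketch below isolates that pattern with a pluggable read function in place of audio_in_read. All names in it are hypothetical, and unlike the sample, it stops on a read error instead of asserting:

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define CHUNK_SIZE 4   /* hypothetical; stands in for AUDIO_BUFFER_SIZE */

/* Reads up to len bytes into dst; returns the byte count or a negative error. */
typedef int (*read_fn)(void *ctx, char *dst, int len);

typedef struct {
    char *samples;
    size_t len;        /* bytes already stored */
    size_t capacity;   /* total size of samples */
} capture_buffer;

/* Fills buf chunk by chunk until it is full or the source stops delivering
 * data. Returns the number of bytes added by this call. */
static size_t
capture_fill(capture_buffer *buf, read_fn read_chunk, void *ctx)
{
    assert(buf && buf->samples && read_chunk);

    size_t total = 0;
    while (buf->len < buf->capacity) {
        size_t room = buf->capacity - buf->len;
        int len = room < CHUNK_SIZE ? (int) room : CHUNK_SIZE;

        int bytes = read_chunk(ctx, buf->samples + buf->len, len);
        if (bytes <= 0)
            break;   /* read error or no more data */

        buf->len += (size_t) bytes;
        total += (size_t) bytes;
    }
    return total;
}

/* Stand-in source that fills every request with one repeated byte. */
static int
fake_read(void *ctx, char *dst, int len)
{
    memset(dst, *(const char *) ctx, (size_t) len);
    return len;
}
```

With a 10-byte buffer and a chunk size of 4, the loop performs reads of 4, 4, and 2 bytes and then stops because the buffer is full.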

Audio Equalizer

This view allows the user to alter the frequency response of an audio stream using linear filters and to play the result. Additionally, there are several predefined values that can be applied to the audio stream. Internally, when the Play button is clicked, the _start_player callback is called, which prepares the audio equalizer layout and applies it to a stream.

Figure: Audio equalizer

static void

_set_equalizer_layout(equalizer_view *view, Evas_Object *parent)

{

Evas_Object *box = elm_box_add(parent);

elm_box_horizontal_set(box, EINA_FALSE);

evas_object_size_hint_weight_set(box, EVAS_HINT_EXPAND, EVAS_HINT_EXPAND);

evas_object_size_hint_align_set(box, EVAS_HINT_FILL, EVAS_HINT_FILL);

evas_object_show(box);

int bands_count = 0;

player_audio_effect_get_equalizer_bands_count(view->player, &bands_count);

view->bands_count = bands_count;

view->sliders = calloc(bands_count, sizeof(Evas_Object*));

for (int i = 0; i < bands_count; ++i)

{

int frequency = 0;

int min = 0;

int max = 0;

player_audio_effect_get_equalizer_band_frequency(view->player, i, &frequency);

player_audio_effect_get_equalizer_level_range(view->player, &min, &max);

Evas_Object *item = _add_band_item(view, box, i, frequency, min, max);

elm_box_pack_end(box, item);

}

elm_layout_content_set(parent, "band_content", box);

}

Gles Cube Player

This view demonstrates the usage of the OpenGL® library together with the audio output stream.

Figure: Gles cube player

The following functions initialize and render the OpenGL® view:

void

_gles_cube_view_glview_init(Evas_Object *obj)

{

gles_cube_view_data *view = evas_object_data_get(obj, GLVIEW_VIEW_DATA_NAME);

RETM_IF(!view, "View data is NULL");

Evas_GL_API *api = elm_glview_gl_api_get(obj);

RETM_IF(!api, "GL API is NULL");

view->shader_ok = color_shader_init(&view->shader, api);

RETM_IF(!view->shader_ok, "Shader initialization failed");

api->glClearColor(VIEW_BG_COLOR);

api->glEnable(GL_DEPTH_TEST);

}

void

_gles_cube_view_glview_render(Evas_Object *obj)

{

gles_cube_view_data *view = evas_object_data_get(obj, GLVIEW_VIEW_DATA_NAME);

RETM_IF(!view, "View data is NULL");

RETM_IF(!view->resize_ok, "Viewport is not ready");

Evas_GL_API *api = elm_glview_gl_api_get(obj);

RETM_IF(!api, "GL API is NULL");

gl_matrix4 matrix;

gl_matrix4_load_identity(&matrix);

gl_matrix4_translate(&matrix, CUBE_XYZ_POSITION);

gl_matrix4_rotate(&matrix, view->angle, CUBE_ROTATE_VECTOR);

api->glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

color_shader_activate(&view->shader, api);

color_shader_load_mv(&view->shader, api, &matrix);

color_object3d_draw(&CUBE_OBJECT3D, api);

color_shader_deactivate(&view->shader, api);

}

Player

Media Player

This view allows the user to play different media sources, such as MP3, AMR, WAV, MP4, and AAC. Internally, when the Play button is clicked, the _start_player callback is called, which prepares the selected media and plays it.

static void

_start_player(media_player_view *view)

{

player_state_e state;

player_get_state(view->player, &state);

if (state != PLAYER_STATE_PLAYING)

{

player_start(view->player);

if (_is_playing(view))

{

_toolbar_play_stop_btn_upd(view);

elm_object_disabled_set(view->progressbar, EINA_FALSE);

int ms = 0;

player_get_duration(view->player, &ms);

elm_slider_min_max_set(view->progressbar, 0, ms / 1000);

elm_slider_value_set(view->progressbar, 0.0);

_label_status_set(view, "Playing");

}

}

if (_is_playing(view))

{

_timer_start(view);

}

}

Figure: Media Player

Multi Play

This view allows the user to play several audio sources simultaneously, such as MP3, AMR, AAC, and WAV. Internally, when a checkbox is checked, a separate player with the selected stream is started, and when it is unchecked, that player is stopped.

Figure: Multi Play

static void

_on_genlist_item_selected_cb(void *data, Evas_Object *obj, void *event_info)

{

Elm_Object_Item *item = (Elm_Object_Item *) event_info;

player_data *player = elm_object_item_data_get(item);

Evas_Object *checkbox = elm_object_item_part_content_get(item, CHECKBOX_PART);

elm_genlist_item_selected_set(item, EINA_FALSE);

assert(checkbox);

assert(player);

if (checkbox && player)

{

bool state = elm_check_state_get(checkbox);

elm_check_state_set(checkbox, !state);

state = elm_check_state_get(checkbox);

if (state)

{

_player_start(player);

}

else

{

_player_stop(player);

}

elm_genlist_item_fields_update(item, SUBTEXT_PART, ELM_GENLIST_ITEM_FIELD_TEXT);

}

}

Video Recorder and Player

This view allows the user to record the camera stream and play another video stream at the same time. Initially, both the camera recorder and the player must be created and initialized:

static void

_create_video_recorder(video_recorder_player_view *view)

{

window_obj *win_obj = view->app->win;

Evas_Object *win = win_obj->win;

if (view->camera)

{

camera_destroy(view->camera);

}

if (camera_create(CAMERA_DEVICE_CAMERA0, &view->camera) == CAMERA_ERROR_NONE)

{

// ... skip some code

recorder_create_videorecorder(view->camera, &view->recorder);

if (view->recorder)

{

recorder_set_file_format(view->recorder, RECORDER_FILE_FORMAT_3GP);

recorder_set_filename(view->recorder, get_data_path(RECORDERED_3GP_VIDEO));

recorder_attr_set_time_limit(view->recorder, limit_seconds);

recorder_set_recording_limit_reached_cb(view->recorder, _limit_reached_cb, view);

recorder_set_recording_status_cb(view->recorder, _record_status_cb, view);

recorder_prepare(view->recorder);

}

}

}

Figure: Video recorder and player

When the Start button is clicked, the view starts recording a video stream to a file and, at the same time, playing another video stream. The recorded file is played after the recording is finished.

static void

_stop_video_recorder(video_recorder_player_view *view)

{

if (view->recorder)

{

recorder_commit(view->recorder);

recorder_unprepare(view->recorder);

recorder_destroy(view->recorder);

view->recorder = NULL;

elm_progressbar_value_set(view->progress_bar, 0.0);

_on_record_completed(view);

}

}

static void _on_record_completed(video_recorder_player_view *view)

{

win_set_view_style(view->app->win, STYLE_DEFAULT_WINDOW);

Elm_Object_Item *video_player_navi_item = video_player_view_add(view->app, view->navi,

get_data_path(RECORDERED_3GP_VIDEO), NULL, NULL);

elm_naviframe_item_pop_cb_set(video_player_navi_item, _video_player_navi_item_pop_cb, view);

}

Recorder

Camera Capture

This view allows the user to capture a separate frame from the camera stream as an image and display it in the saved images. There are numerous settings that can be used to adjust the capturing process and to customize the camera stream style, quality, and filtering. Initially, the camera view should be prepared and started:

static void
_create_camera(camera_capture_view *view)
{
    window_obj *win_obj = view->app->win;
    Evas_Object *win = win_obj->win;

    if (view->camera)
    {
        _destroy_camera(view);
    }

    if (camera_create(CAMERA_DEVICE_CAMERA0, &view->camera) == CAMERA_ERROR_NONE)
    {
        int x = 0, y = 0, w = 0, h = 0;
        Evas_Object *edje_layout = elm_layout_edje_get(view->layout);
        edje_object_calc_force(edje_layout);
        _get_geometry_camera_win(view, &x, &y, &w, &h);
        ...
    }
    else
    {
        view->camera = NULL;
    }
}

static void
_start_camera(camera_capture_view *view)
{
    if (view->camera)
    {
        camera_start_preview(view->camera);
    }
}

When the Capture button is clicked, the application captures a frame of the current camera view and places it in a scrollable gallery at the bottom of the view.

static void
_on_camera_capture_cb(camera_image_data_s *image, camera_image_data_s *postview,
        camera_image_data_s *thumbnail, void *user_data)
{
    camera_capture_view *view = user_data;
    if (view)
    {
        char *image_path = _save_file(view, image);
        if (image_path)
        {
            thumbnail_data *mlt_data = calloc(1, sizeof(thumbnail_data));
            if (mlt_data)
            {
                mlt_data->view = view;
                mlt_data->image_path = image_path;

                // Hand the gallery update over to the main loop instead of
                // touching the UI directly from the capture callback
                ecore_main_loop_thread_safe_call_async(_on_ecore_main_loop_cb, mlt_data);
            }
        }
    }
}

Each captured frame can be opened in the image viewer by clicking its thumbnail:

static void
_on_thumb_clicked_cb(void *data, Evas_Object *obj, void *event_info)
{
    thumbnail_data *thumbnail = data;
    if (thumbnail)
    {
        if (capture_image_viewer_add(thumbnail->view->app, thumbnail->view->navi,
                thumbnail->image_path, _on_capture_image_viewer_del_cb, thumbnail->view))
        {
            thumbnail->view->activated = false;
            _stop_camera(thumbnail->view);
        }
    }
}

Figure: Camera Capture

Video Recorder

This view allows the user to record the camera stream and play the recorded video. Numerous settings are available to adjust the recording process and to customize the style, quality, and filtering of the camera stream. First, the video recorder must be created and prepared:

Figure: Video recorder

static void
_create_video_recorder(video_recorder_view *view)
{
    if (view->recorder)
    {
        _destroy_video_recorder(view);
    }

    recorder_create_videorecorder(view->camera, &view->recorder);
    if (view->recorder)
    {
        _recorder_file_format_set(view, RECORDER_FILE_FORMAT_3GP);
        recorder_attr_set_time_limit(view->recorder, limit_seconds);
        recorder_set_recording_limit_reached_cb(view->recorder, _limit_reached_cb, view);
        recorder_set_recording_status_cb(view->recorder, _record_status_cb, view);
        recorder_prepare(view->recorder);
    }
}

When the Start button is clicked, the application starts recording video. The recorded video can be played in the Media Player.

static void
_start_video_recorder(video_recorder_view *view)
{
    if (view->recorder)
    {
        recorder_start(view->recorder);
    }
}

Audio Recorder

This view allows the user to record an audio stream and play the recorded audio. Several settings are available to adjust the audio quality and pre-processing. First, the audio recorder must be created and configured:

static void
_recorder_create(audio_recorder_view *view)
{
    if (recorder_create_audiorecorder(&view->recorder) == RECORDER_ERROR_NONE)
    {
        _get_supported_codec_list(view);
        if (view->codec_list_len)
        {
            _recorder_codec_set(view, view->codec_list[0]);
        }
        else
        {
            _recorder_codec_set(view, RECORDER_AUDIO_CODEC_PCM);
        }

        _recorder_quality_set(view, AQ_MEDIUM);

        recorder_set_recording_status_cb(view->recorder, _on_recording_status_cb, view);
        recorder_set_recording_limit_reached_cb(view->recorder, _on_recording_limit_reached_cb, view);

        recorder_attr_set_audio_channel(view->recorder, 1);
        recorder_attr_set_audio_device(view->recorder, RECORDER_AUDIO_DEVICE_MIC);
        recorder_attr_set_time_limit(view->recorder, MAX_TIME);

        _label_size_set(view, 0);
        _label_time_set(view, 0);
    }
}

Figure: Audio recorder
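
The creation code above picks the first codec reported as supported and falls back to PCM when the list is empty. As a minimal, self-contained sketch of that selection rule only, the helper below uses plain integers in place of the codec enum. The name `sketch_pick_codec` is hypothetical and does not appear in the sample.

```c
#include <stddef.h>

/* Hypothetical helper, not part of the sample: returns the first entry of
 * a list of supported codec identifiers, or fallback when the list is
 * missing or empty. */
int
sketch_pick_codec(const int *codec_list, size_t codec_list_len, int fallback)
{
    if (codec_list != NULL && codec_list_len > 0)
        return codec_list[0];

    return fallback;
}
```

Keeping the fallback as a parameter makes the rule easy to check in isolation, without a device that reports its supported codecs.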

When the Start button is clicked, the application starts recording the audio stream. The recorded audio can be played in the Media Player.

static void
_recorder_start(audio_recorder_view *view)
{
    if (view->recorder)
    {
        recorder_prepare(view->recorder);
        recorder_start(view->recorder);
        if (_recorder_is_recording(view))
        {
            elm_object_item_text_set(view->ctrl_item, STR_STOP);
        }
    }
}

static void
_recorder_stop(audio_recorder_view *view)
{
    if (view->recorder)
    {
        recorder_commit(view->recorder);
        if (!_recorder_is_recording(view))
        {
            elm_object_item_text_set(view->ctrl_item, STR_START);
            recorder_unprepare(view->recorder);
            _label_size_set(view, 0);
            _label_time_set(view, 0);
            elm_progressbar_value_set(view->progressbar, 0.0);
        }
    }
}

static void
_on_ctrl_btn_pressed_cb(void *data, Evas_Object *obj, void *event_info)
{
    audio_recorder_view *view = data;
    if (view)
    {
        if (_recorder_is_recording(view))
        {
            _recorder_stop(view);
            _navigate_to_audio_player_view(view);
        }
        else
        {
            _recorder_start(view);
        }
    }
}
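
The recorder views register a recording status callback and a time limit, and reset a progress bar when recording stops. The sample's status callbacks are not shown here, so the following is only a hedged sketch of how a progress value might be derived. It assumes the callback reports elapsed time in milliseconds and that the limit is given in seconds. The helper name `sketch_progress_fraction` is hypothetical.

```c
/* Hypothetical helper, not part of the sample: maps elapsed recording time
 * to a progress value in the range [0.0, 1.0].
 * Assumes elapsed time is in milliseconds and the limit is in seconds. */
double
sketch_progress_fraction(unsigned long long elapsed_ms, int limit_seconds)
{
    if (limit_seconds <= 0)
        return 0.0; /* no limit set: nothing to measure against */

    double fraction = (double)elapsed_ms / ((double)limit_seconds * 1000.0);

    /* Clamp in case a status update arrives after the limit is reached */
    return fraction > 1.0 ? 1.0 : fraction;
}
```

A value computed this way could be passed to elm_progressbar_value_set(), which the sample already uses to reset the bar to 0.0.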